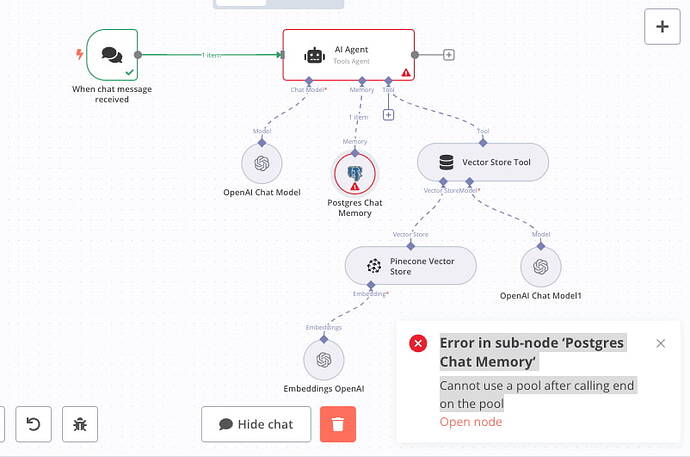

I used the Postgres memory node connected to Supabase in n8n. It works for the first human message, but on the second human message I get this error:

I have no idea how to solve this problem. I have looked through all the settings in Supabase and n8n…

# Debug info

## core

- n8nVersion: 1.74.0

- platform: npm

- nodeJsVersion: 22.12.0

- database: postgres

- executionMode: regular

- concurrency: -1

- license: enterprise (production)

- consumerId: 5a5…

## storage

- success: all

- error: all

- progress: false

- manual: true

- binaryMode: memory

## pruning

- enabled: true

- maxAge: 336 hours

- maxCount: 10000 executions

## client

- userAgent: mozilla/5.0 (macintosh; intel mac os x 10_15_7) applewebkit/ (khtml, like gecko) chrome/ safari/

- isTouchDevice: false

Generated at: 2025-01-14T14:04:37.811Z

n8n

January 14, 2025, 2:09pm

2

It looks like your topic is missing some important information. Could you provide the following if applicable.

- n8n version:
- Database (default: SQLite):
- n8n EXECUTIONS_PROCESS setting (default: own, main):
- Running n8n via (Docker, npm, n8n cloud, desktop app):
- Operating system:

vpop

January 15, 2025, 11:40pm

5

This just started happening to me too after getting the latest update. Anyone figure out how to solve?

MattB

January 16, 2025, 5:26am

6

Dear @jan ,

I have the same issue hix

Hi guys

ria

January 16, 2025, 8:57am

9

Hi guys, good news, we got a fix for this ready to be released. Hopefully will go out this week still.

n8n-io:master ← n8n-io:backport-connection-pooling-to-postgres-v1

opened 11:51AM - 07 Jan 25 UTC

## Summary

This uses the pool manager for Postgres v1. It also fixes the Postgres credential test, which previously shut down the shared pool and led to `Connection pool of the database object has been destroyed.` on any subsequent execution.

Taken from the [pg-promise docs](https://vitaly-t.github.io/pg-promise/index.html):

> Object db represents the Database protocol with lazy connection, i.e. only the actual query methods acquire and release the connection automatically. Therefore, you should create only one global/shared db object per connection details.

Thus it's not necessary to release connections manually. They are managed by pg-promise.
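To illustrate the "one shared db object per connection details" idea, here is a minimal TypeScript sketch. It does not use pg-promise and is not n8n's actual pool manager: `ConnectionDetails`, `FakeDb`, `getSharedDb`, and `testCredential` are hypothetical names, and `FakeDb` only simulates a pooled database object. The point is that a credential test which closes its own throwaway object cannot destroy the pool that executions share.

```typescript
// Hypothetical connection details; the field names are illustrative only.
interface ConnectionDetails {
  host: string;
  port: number;
  database: string;
  user: string;
}

// Stand-in for a database object that owns a connection pool (not pg-promise).
class FakeDb {
  readonly details: ConnectionDetails;
  private destroyed = false;

  constructor(details: ConnectionDetails) {
    this.details = details;
  }

  query(sql: string): string {
    if (this.destroyed) {
      // Simulates the error quoted in the PR description.
      throw new Error("Connection pool of the database object has been destroyed.");
    }
    return `ran: ${sql}`;
  }

  end(): void {
    this.destroyed = true;
  }
}

// One shared db object per distinct set of connection details.
const sharedDbs = new Map<string, FakeDb>();

function getSharedDb(details: ConnectionDetails): FakeDb {
  const key = JSON.stringify([details.host, details.port, details.database, details.user]);
  let db = sharedDbs.get(key);
  if (db === undefined) {
    db = new FakeDb(details);
    sharedDbs.set(key, db);
  }
  return db;
}

// Assumption: one way to keep a credential test from closing the shared pool
// is to give it a separate, throwaway object and close only that one.
function testCredential(details: ConnectionDetails): boolean {
  const db = new FakeDb(details);
  try {
    db.query("SELECT 1");
    return true;
  } finally {
    db.end();
  }
}
```

With this shape, calling `testCredential` between two executions leaves the object returned by `getSharedDb` usable.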

You can verify this using these queries and constructing a workflow that executes them once a second:

Lists all open connections per db, grouped by state.

```sql
SELECT
  datname,
  usename,
  state,
  COUNT(*)
FROM pg_stat_activity
WHERE backend_type = 'client backend'
GROUP BY datname, usename, state;
```

Long-running query to simulate load.

```sql
SELECT
  g.i,
  pg_sleep(0.001) -- each row sleeps for 1ms
FROM generate_series(1, 1000) g(i);
```

By default the pool has a size of 10. If more connections are needed, the next execution hangs until a connection is free.

That limit is way too low.
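The waiting behaviour described above can be sketched with a toy bounded pool. This is an illustration only, not the node-postgres or pg-promise implementation: `BoundedPool`, `acquire`, and `release` are hypothetical names, and the pool hands out slots rather than real connections.

```typescript
// Toy pool: at most `max` slots are in use; extra requests wait in a queue.
class BoundedPool {
  private readonly max: number;
  private inUse = 0;
  private readonly waiting: Array<() => void> = [];

  constructor(max: number) {
    this.max = max;
  }

  // Runs onReady immediately if a slot is free, otherwise queues it.
  acquire(onReady: () => void): void {
    if (this.inUse < this.max) {
      this.inUse += 1;
      onReady();
    } else {
      this.waiting.push(onReady);
    }
  }

  // Hands the freed slot to the oldest waiter, or marks it as free.
  release(): void {
    const next = this.waiting.shift();
    if (next !== undefined) {
      next();
    } else if (this.inUse > 0) {
      this.inUse -= 1;
    }
  }

  get pending(): number {
    return this.waiting.length;
  }
}

const started: string[] = [];
const pool = new BoundedPool(2);
pool.acquire(() => started.push("execution 1"));
pool.acquire(() => started.push("execution 2"));
pool.acquire(() => started.push("execution 3")); // waits: both slots are taken
pool.release(); // a slot frees up, so execution 3 can start
```

With a pool of size 2, the third request only starts once `release()` is called, which mirrors an execution hanging until a connection is returned.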

I set it to 10_000 to test what happens if I run out of the 100 connections my local Postgres allows:

Also, shortly after deactivating the workflow, the pool was cleaned up and all 100 connections were available again.

I would like to set it to unlimited for now, but that's not possible:

https://github.com/brianc/node-postgres/issues/1977

For that reason I made it configurable in the credential.

## Related Linear tickets, Github issues, and Community forum posts

Fixes #12517

[NODE-2240](https://linear.app/n8n/issue/NODE-2240)

## Review / Merge checklist

- [x] PR title and summary are descriptive. ([conventions](../blob/master/.github/pull_request_title_conventions.md))

- [ ] ~[Docs updated](https://github.com/n8n-io/n8n-docs) or follow-up ticket created.~

- [ ] Tests included.

- [ ] PR Labeled with `release/backport` (if the PR is an urgent fix that needs to be backported)

Sorry for this and thanks for your patience

Thank you for your feedback. We hope this gets fixed ASAP, as it affects production.

Same. Try rolling back the n8n version.

dunx

January 17, 2025, 8:33am

14

Which version are you using?

ria

January 17, 2025, 10:18am

15

Hi everyone, we’ve just released the fix with version 1.75.1

cjuarez

January 17, 2025, 11:30am

16

I have updated to 1.74.2, which is the latest available, and the error persists.

Not working for me

Edit: Could you please add an option to downgrade to 1.73.1? That version was working fine

ria

January 17, 2025, 1:18pm

18

Thanks for the feedback. We’re working on it!

For now, if you’re on cloud and want to downgrade to version 1.73.1, please message our n8n support at [email protected]

Thanks for your patience in the meantime!

Thank you very much for being hands-on solving the problem. At the moment, I don’t have any production workflow affected, so I can use Window Buffer Memory while testing other workflows until you solve it. Thank you very much.

Seems to function now after the last update.

Thanks for the great job!