Describe the problem/error/question

Hello n8n Community,

I am trying to extract the name of the specific LLM model used in my workflow for analytics, but I am having trouble accessing the data.

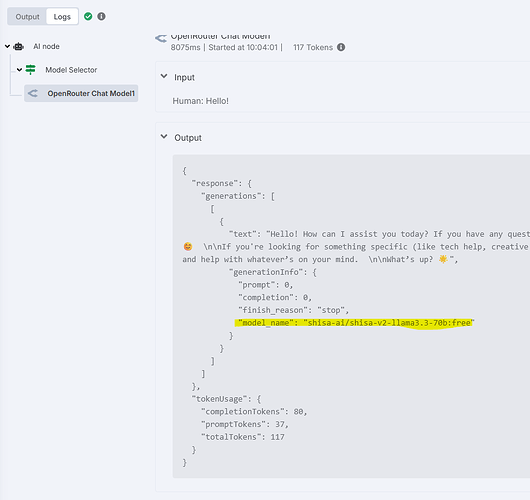

My Setup: My workflow has an AI Agent node. It contains a Model Selector sub-node, which in turn contains the actual LLM model node (e.g., “OpenRouter Chat Model1”).

The Problem: I need to retrieve the value of model_name from the LLM response. As shown in the attached screenshot, this model_name is present in the output of the “OpenRouter Chat Model1” sub-node, located at item.json.generations[0].generationInfo.model_name.
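
To make the path concrete, here is a minimal sketch in plain JavaScript of the lookup I am after. It is only an illustration: extractModelName and sampleItemJson are hypothetical names, the sample object mirrors just the fields visible in the screenshot, and it assumes the sub-node's output JSON is already in hand, which is exactly the part I cannot do from a downstream node.

```javascript
// Hypothetical helper, not part of n8n's API.
// Follows the path generations[0].generationInfo.model_name and
// returns null instead of throwing when any level is missing.
function extractModelName(itemJson) {
  const name = itemJson?.generations?.[0]?.generationInfo?.model_name;
  return typeof name === 'string' && name.length > 0 ? name : null;
}

// Sample data shaped like the sub-node output in the screenshot (assumed shape).
const sampleItemJson = {
  generations: [
    { generationInfo: { model_name: 'shisa-ai/shisa-v2-llama3.3-70b:free' } },
  ],
};

console.log(extractModelName(sampleItemJson)); // prints "shisa-ai/shisa-v2-llama3.3-70b:free"
console.log(extractModelName({}));             // prints null
```

The null fallback is deliberate, so a logging step would not fail the whole run if a model response omits generationInfo.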

The model name, including reference to the subnode, can also be found in the browser console.

I attempted to access this information with the expression {{ $('Model Selector').item.json.options.model }}. However, this produced the following error: "No path back to referenced node. There is no connection back to the node 'Model Selector', but it's used in an expression here. Please wire up the node (there can be other nodes in between)."

I understand that this error likely means $('NodeName') expressions are designed to reference nodes that are upstream in the direct data flow path, and that sub-nodes inside a parent node do not typically expose their input or output to downstream nodes through this syntax. With item.json.options.model I was trying to read a node parameter, but the model name I need is actually in the sub-node's output.

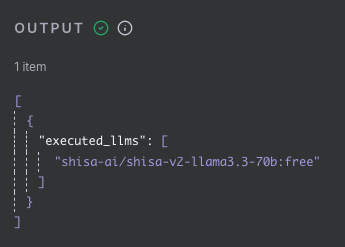

My Goal: I need a reliable and correct n8n expression to extract the model_name (e.g., “shisa-ai/shisa-v2-llama3.3-70b:free”) so I can use it in subsequent nodes for logging or other processing.

What is the error message (if any)?

No path back to referenced node

There is no connection back to the node ‘Model Selector’, but it’s used in an expression here.

Please wire up the node (there can be other nodes in between).

Please share your workflow

Information on your n8n setup

- n8n version: 1.100

- Database (default: SQLite): PostgreSQL

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker

- Operating system: Alpine Linux