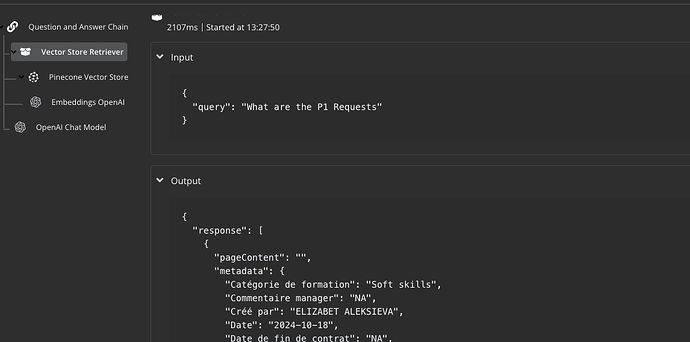

Describe the problem/error/question

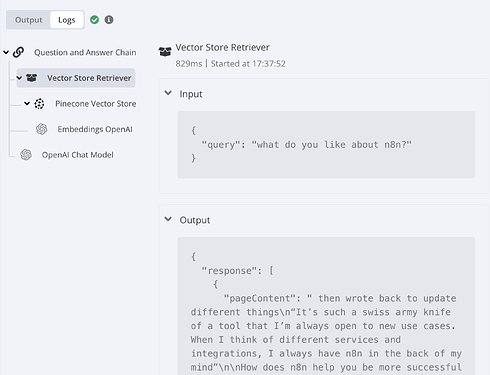

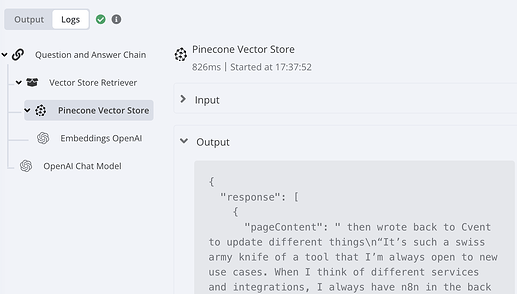

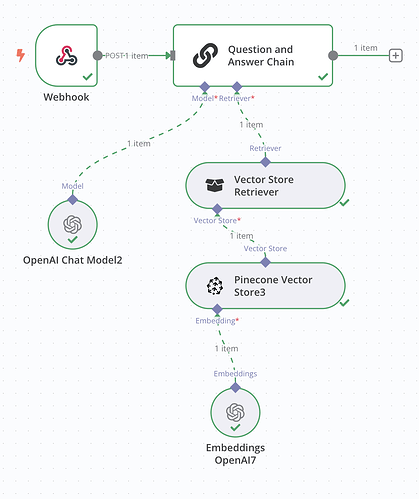

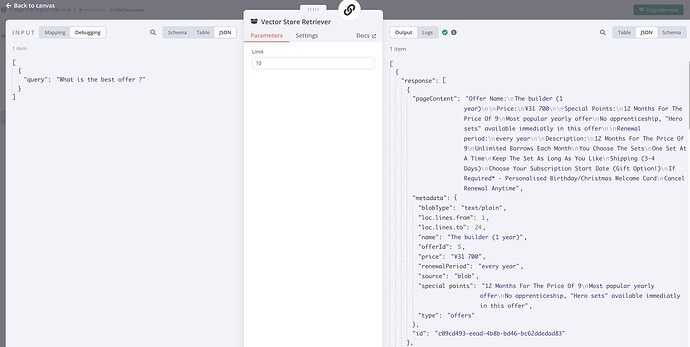

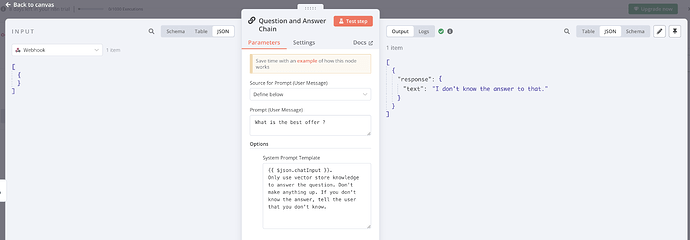

I’m using a simple workflow in which a “Question and Answer Chain” node is connected to a Pinecone vector store and receives its input from a chat message trigger.

My problem is that I’m getting results from the vector store, but they don’t seem to be used as context for my chat model.
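For reference, "used as context" in a retrieval QA chain usually means that the text of each retrieved chunk is concatenated into the prompt sent to the chat model, alongside the user's question (the "stuff" pattern from LangChain). The sketch below only illustrates that idea. `Document`, `TEMPLATE` and `build_prompt` are hypothetical names, and the template is not the exact prompt n8n's Question and Answer Chain uses.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical stand-in for a retrieved chunk; "page_content" mirrors
    # the LangChain field name, "metadata" is illustrative only.
    page_content: str
    metadata: dict = field(default_factory=dict)


# Hypothetical prompt template, NOT the exact prompt n8n sends.
TEMPLATE = (
    "Use the following context to answer the question.\n\n"
    "{context}\n\n"
    "Question: {question}"
)


def build_prompt(question: str, docs: list[Document]) -> str:
    """'Stuff' every retrieved chunk into a single prompt string."""
    context = "\n\n".join(doc.page_content for doc in docs)
    return TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    docs = [
        Document("Refunds are processed within 5 days."),
        Document("Support is available on weekdays."),
    ]
    print(build_prompt("How long do refunds take?", docs))
```

If the retrieved chunks have empty or unexpected text (for example, if the content was stored under a different metadata field when the index was populated), the resulting context is empty, and the model answers as if nothing had been retrieved.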

Please share your workflow

```json
{
  "nodes": [
    {
      "parameters": {
        "model": "text-embedding-ada-002",
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.embeddingsOpenAi",
      "typeVersion": 1.2,
      "position": [120, 620],
      "id": "24a95124-29b6-4c77-95cc-49389c72935d",
      "name": "Embeddings OpenAI",
      "credentials": {
        "openAiApi": {
          "id": "SO1G7Uhoa1vePIWz",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "pineconeIndex": {
          "__rl": true,
          "value": "c92270a6-5826-4d82-8cae-fd95628ab1a1-requests",
          "mode": "list",
          "cachedResultName": "c92270a6-5826-4d82-8cae-fd95628ab1a1-requests"
        },
        "options": {
          "pineconeNamespace": "c92270a6-5826-4d82-8cae-fd95628ab1a1-requests"
        }
      },
      "type": "@n8n/n8n-nodes-langchain.vectorStorePinecone",
      "typeVersion": 1,
      "position": [300, 420],
      "id": "e5ad4537-89f4-4f80-b9de-bb918e47e45c",
      "name": "Pinecone Vector Store",
      "credentials": {
        "pineconeApi": {
          "id": "WqIAfFw3vpo6R6Jn",
          "name": "PineconeApi account"
        }
      }
    },
    {
      "parameters": {},
      "type": "@n8n/n8n-nodes-langchain.retrieverVectorStore",
      "typeVersion": 1,
      "position": [340, 260],
      "id": "9b484b04-bad2-419a-9ade-2641a6cfcd43",
      "name": "Vector Store Retriever"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [20, -20],
      "id": "806088fa-ac93-4bb1-b7ff-df360cb163b7",
      "name": "When chat message received",
      "webhookId": "a73857fd-6d06-4b06-a44b-c1b0059c2a9d"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chainRetrievalQa",
      "typeVersion": 1.4,
      "position": [240, -20],
      "id": "2e8e153c-a5c9-4d34-b053-ca1f7d782986",
      "name": "Question and Answer Chain"
    },
    {
      "parameters": {
        "model": {
          "__rl": true,
          "value": "gpt-4o",
          "mode": "list",
          "cachedResultName": "gpt-4o"
        },
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",
      "typeVersion": 1.2,
      "position": [160, 200],
      "id": "6a6b17cb-40f2-4bb5-aefe-9116adc17338",
      "name": "OpenAI Chat Model",
      "credentials": {
        "openAiApi": {
          "id": "SO1G7Uhoa1vePIWz",
          "name": "OpenAi account"
        }
      }
    }
  ],
  "connections": {
    "Embeddings OpenAI": {
      "ai_embedding": [
        [
          { "node": "Pinecone Vector Store", "type": "ai_embedding", "index": 0 }
        ]
      ]
    },
    "Pinecone Vector Store": {
      "ai_vectorStore": [
        [
          { "node": "Vector Store Retriever", "type": "ai_vectorStore", "index": 0 }
        ]
      ]
    },
    "Vector Store Retriever": {
      "ai_retriever": [
        [
          { "node": "Question and Answer Chain", "type": "ai_retriever", "index": 0 }
        ]
      ]
    },
    "When chat message received": {
      "main": [
        [
          { "node": "Question and Answer Chain", "type": "main", "index": 0 }
        ]
      ]
    },
    "OpenAI Chat Model": {
      "ai_languageModel": [
        [
          { "node": "Question and Answer Chain", "type": "ai_languageModel", "index": 0 }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "c52aee3b038b10b2a3e35f4e2636449ac19d523af47b48b8ff439fc46ea9ac62"
  }
}
```
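To double-check the wiring of an exported workflow like the one above, the `connections` map can be inverted so that each target node lists its inputs. The sketch below assumes only the export shape shown above (source node → connection type → list of branches → list of links). The script name `check_inputs.py` and the file name `workflow.json` are hypothetical.

```python
import json
import sys
from collections import defaultdict


def inputs_by_target(workflow: dict) -> dict[str, list[tuple[str, str]]]:
    """Invert an n8n 'connections' map: target node -> [(source node, connection type)]."""
    result: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for source, outputs in workflow.get("connections", {}).items():
        for branches in outputs.values():      # e.g. "main", "ai_retriever"
            for branch in branches:            # one list per output index
                for link in branch:            # individual connections
                    result[link["node"]].append((source, link["type"]))
    return dict(result)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python check_inputs.py workflow.json
    with open(sys.argv[1], encoding="utf-8") as fh:
        wf = json.load(fh)
    for source, conn_type in inputs_by_target(wf).get("Question and Answer Chain", []):
        print(f"{conn_type:18} <- {source}")
```

For the workflow posted here, the chain should report three inputs: `ai_retriever` from "Vector Store Retriever", `main` from "When chat message received", and `ai_languageModel` from "OpenAI Chat Model". This confirms that the nodes are connected, so any remaining issue lies in what is retrieved or how it is prompted, not in the wiring.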

Share the output returned by the last node

Information on your n8n setup

- **n8n version:** n8n cloud