Hi Team,

Is it possible to read all data inside a google sheet and pass it all to MySQL Database? If it’s possible, can you provide a sample setup?

Thanks

Hi Team,

Is it possible to read all data inside a google sheet and pass it all to MySQL Database? If it’s possible, can you provide a sample setup?

Thanks

Hi @daniel.o, welcome to the community!

This should be fairly straightforward. Assuming both your Google Sheet and MySQL table have identical column names you can simply connect the Google Sheet node (reading your data) to the MySql node (inserting your data).

If the columns don’t match exactly you’d need to add a Rename Keys or Set node to the mix. Here’s an example workflow:

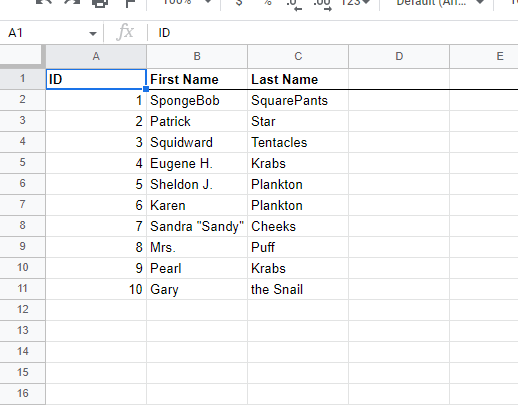

This workflow will read a Google Sheet like below, then rename the columns to match the columns in my database.

The MySQL node will return the result following the insert operation:

Thank you for this response. Forgive me, what I want to accomplish is read all data inside a google sheet, delete a table from database and re-create that table and add all data to that table?

This should work pretty similar to the above. Simply add additional MySQL nodes at the start of your workflow running the queries dropping your existing table and creating a new one.

Can you give me a sample query. I’ve tried it my own but not working after executing the node.

Which problem exactly are you seeing?

Wait let me create a recording

I don’t know why but it keeps on loading. Here is the video recording link: https://cast.obi.services/conversations/3a29b418-500f-54a5-83b1-56159f87b98a

Can you just provide a sample setup?

Here’s the recording that I received the successful message after execution of the query dropping the table but as I take a look at the database, the table is still not deleted at all.

https://cast.obi.services/conversations/090660ba-a797-565d-8bc1-0e4fc30783d1

Hey, so I just tested this on my end using the following docker compose environment:

version: '3.1'

services:

n8n:

image: n8nio/n8n:0.197.1

ports:

- 5678:5678

volumes:

- ./n8n_data:/home/node/.n8n

mysql:

image: docker.io/library/mariadb:10.9

ports:

- 3306:3306

environment:

- MYSQL_ROOT_PASSWORD=SeCrEt1234

volumes:

- ./mysql_data:/var/lib/mysql

adminer:

image: adminer

ports:

- 8080:8080

This is my current database content:

This is the workflow I am executing:

This is the database content after the workflow execution:

As you can see the users table I was using for testing purposes is gone.

So it very much seems the reason this doesn’t work on your side would be with your specific environment. Perhaps missing permissions to drop a table? Might be worth investigating this with your database admin.

Thank you, my flow is now fully working. Just one question, does n8n have email scraping?

Thanks

Hi @daniel.o, glad to hear this is working, thanks for confirming! Looks like your latest question is a duplicate of Email Scraping in n8n, so I’ll close this thread over here.