### Bug Description

I installed self-hosted n8n on a Windows 10 system via npm.…

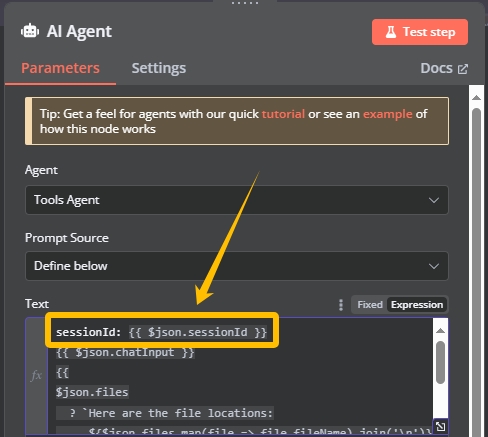

I used the Chat Trigger to upload a .docx file and then wrote the file to the `abc` folder with the Read/Write Files from Disk node. However, the file name is wrong because the expression inside `{{ }}` (`{{ $json.files[0].fileName}}`) is not evaluated. This is visible in the video below.

https://github.com/user-attachments/assets/fecae78d-f5b9-4bbf-9361-80e961779ca1

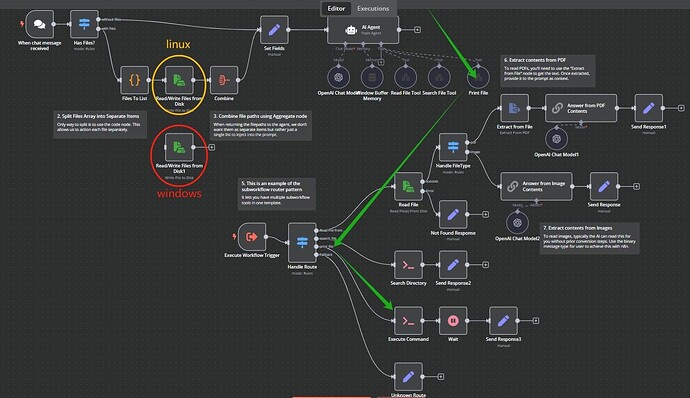

Workflow configuration:

```json
{
  "nodes": [
    {
      "parameters": {},
      "id": "63d2bf70-2d86-4862-8020-702bfea5ce3f",
      "name": "Window Buffer Memory",
      "type": "@n8n/n8n-nodes-langchain.memoryBufferWindow",
      "typeVersion": 1.2,
      "position": [520, 80]
    },
    {
      "parameters": {
        "public": true,
        "initialMessages": "upload file",
        "options": {
          "allowFileUploads": true,
          "responseMode": "lastNode",
          "showWelcomeScreen": false
        }
      },
      "id": "37b26983-0f0c-4cfa-a7b7-511d7ba377a8",
      "name": "When chat message received",
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [-20, -140],
      "webhookId": "1c1f408c-25b5-4776-a3cf-da267611b299"
    },
    {
      "parameters": {
        "options": {
          "baseURL": "https://oneapi.6868ai.com/v1",
          "temperature": 0.3
        }
      },
      "id": "a267a5d5-ac9a-4fb2-88de-bfed809ca9d3",
      "name": "OpenAI",
      "type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",
      "typeVersion": 1,
      "position": [380, 80],
      "notesInFlow": false,
      "credentials": {
        "openAiApi": {
          "id": "iuBjR0mRLNV8iloe",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "operation": "write",
        "fileName": "=C:\\\\Users\\\\Administrator\\\\Desktop\\\\abc\\\\{{ $json.files[0].fileName}}",
        "dataPropertyName": "=data0",
        "options": {}
      },
      "type": "n8n-nodes-base.readWriteFile",
      "typeVersion": 1,
      "position": [240, -140],
      "id": "f8657482-a31d-4f4e-82f3-8f133b067615",
      "name": "Read/Write Files from Disk1"
    },
    {
      "parameters": {
        "promptType": "define",
        "text": "=uploadFile: {{ $json.fileName}}\nchatInput: {{ $json.chatInput }}\nsessionId: {{ $json.sessionId }}",
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.agent",
      "typeVersion": 1.7,
      "position": [460, -140],
      "id": "72166ca4-d01a-41a5-a993-4647e178ed1c",
      "name": "AI Agent1"
    }
  ],
  "connections": {
    "Window Buffer Memory": {
      "ai_memory": [
        [
          {
            "node": "AI Agent1",
            "type": "ai_memory",
            "index": 0
          }
        ]
      ]
    },
    "When chat message received": {
      "main": [
        [
          {
            "node": "Read/Write Files from Disk1",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "OpenAI": {
      "ai_languageModel": [
        [
          {
            "node": "AI Agent1",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    },
    "Read/Write Files from Disk1": {
      "main": [
        [
          {
            "node": "AI Agent1",
            "type": "main",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {}
}
```

### To Reproduce

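1. Import the workflow configuration above into n8n.
2. Open the chat, upload a .docx file, and send a message.
3. Check the name of the file written to the `abc` folder.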

### Expected behavior

The expression inside `{{ }}` should be evaluated, so the file written to the `abc` folder takes the name of the uploaded file.
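To make the expected result concrete, here is a minimal sketch of the substitution the write node should perform. It is a toy resolver, not n8n's expression engine: it only handles the single `{{ $json.files[0].fileName}}` expression used in this workflow, and the `ChatItem` shape, the `resolveFileName` helper, and the file name `example.docx` are all hypothetical, modeled only on the fields the workflow references.

```typescript
// Hypothetical item shape, modeled only on the field the workflow references
// ($json.files[0].fileName); the real Chat Trigger output may contain more fields.
interface ChatFile {
  fileName: string;
}

interface ChatItem {
  files: ChatFile[];
}

// Toy resolver for illustration only (NOT n8n's expression engine):
// replaces the `{{ $json.files[0].fileName }}` expression with the first file's name.
// A replacer function is used so "$" characters in file names are inserted literally.
function resolveFileName(template: string, json: ChatItem): string {
  return template.replace(
    /\{\{\s*\$json\.files\[0\]\.fileName\s*\}\}/g,
    () => json.files[0].fileName,
  );
}

// Template modeled on the workflow's fileName parameter: leading "=" dropped and
// backslashes simplified to single path separators for illustration.
const template = "C:\\Users\\Administrator\\Desktop\\abc\\{{ $json.files[0].fileName}}";
const item: ChatItem = { files: [{ fileName: "example.docx" }] };

console.log(resolveFileName(template, item));
// prints C:\Users\Administrator\Desktop\abc\example.docx
```

In other words, the expected output path ends with the uploaded file's name rather than the unevaluated `{{ ... }}` text.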

### Operating System

Windows 10 (self-hosted n8n installed via npm)

### n8n Version

1.70.1

### Node.js Version

20.18.0

### Database

SQLite (default)

### Execution mode

main (default)