Thanks for the workaround, @miguel-mconf. I was able to add the Code node this way. However, the code doesn't return any prompt or completion token counts. I'm using OpenAI's gpt-4o-mini model. Do you know what I need to change in the code to see the estimated token usage?

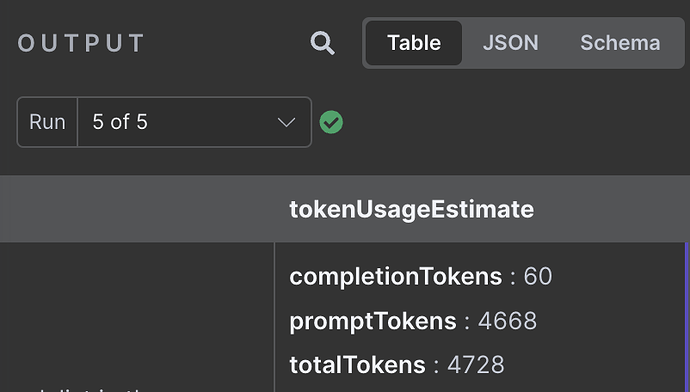

When the OpenAI chat model is connected directly to the AI Agent, its output does show this token usage.

But when I add the Code node between the AI Agent and the chat model, neither the OpenAI chat model nor the Code node shows any output.