Hey Buddies!

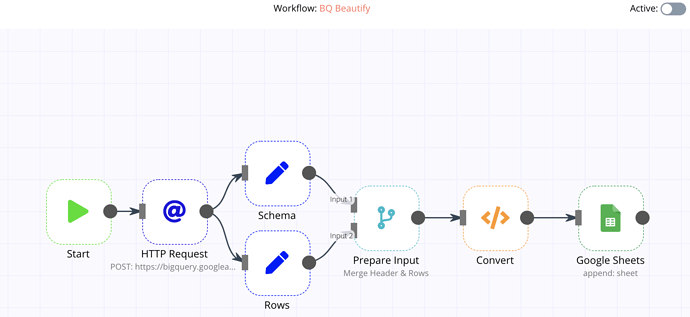

Easy way how to send your own pretty customised Job to big query and save answer to Google Sheet.

1. HTTP Request

URL (don’t forget edit your {Your project ID})

https://bigquery.googleapis.com/bigquery/v2/projects/{Your project ID}/queries

JSON/RAW Parameters

true

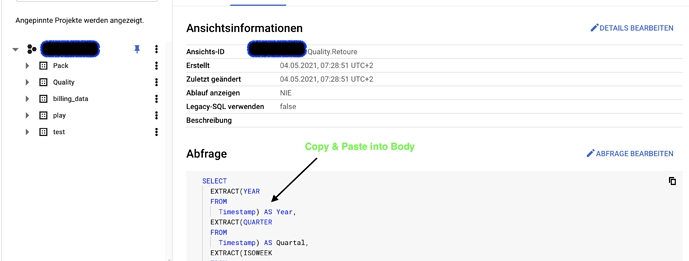

Tip: You can simply Copy&Paste your Queries from Big Query Console ![]()

Job/Query (paste into Body Parameters and don’t forget edit your location e.g.

Body Parameters:

{“query”: “Your Query”,

“location”: “europe-west3”,

“useLegacySql”: false,

“useQueryCache”: true}

2. SET Schema

Keep only Set

true

Value to Set: String

Name:schema

Value:{{$json["data"]["schema"]}}

3. SET Rows

Keep only Set

true

Value to Set: String

Name:rows

Value:{{$json["data"]["rows"]}}

4. Merge

Mode:

Merge By Index

Join:Inner Join

5. convert (Credits an Jan)

JavaScript Code:

function convertBQToMySQLResults(schema, rows) {

var resultRows = []

function recurse (schemaCur, rowsCur, colName) {

if (Array.isArray(schemaCur) && !Array.isArray(result[colName])) {

for(var i=0, l=schemaCur.length; i<l; i++) {

if (colName === "")

recurse(schemaCur[i], rowsCur.f[i], colName + schemaCur[i].name)

else

recurse(schemaCur[i], rowsCur.f[i], colName + "." + schemaCur[i].name)

}

}

if (schemaCur.type && schemaCur.type === "RECORD") {

if (schemaCur.mode !== "REPEATED") {

var valIndex = 0

for (var p in schemaCur.fields) {

if (rowsCur.v === null) {

recurse(schemaCur.fields[p], rowsCur, colName + "." + schemaCur.fields[p].name)

} else {

recurse(schemaCur.fields[p], rowsCur.v.f[valIndex], colName + "." + schemaCur.fields[p].name)

}

valIndex++

}

}

if (schemaCur.mode === "REPEATED") {

result[colName] = []

for (var x in rowsCur.v) {

recurse(schemaCur.fields, rowsCur.v[x], colName)

}

}

} else {

if (schemaCur.mode === "REPEATED") {

if (rowsCur.v !== null) {

result[colName] = rowsCur.v.map( (value, index) => { return value.v })

} else {

result[colName] = [ null ]

}

} else if (Array.isArray(result[colName])) {

let nextRow = {}

for (var j in schemaCur) {

nextRow[colName + "." + schemaCur[j].name] = Array.isArray(rowsCur.v.f[j].v) ? rowsCur.v.f[j].v.map( (value, index) => { return value.v }) : rowsCur.v.f[j].v

}

result[colName].push(nextRow)

} else {

if (colName !== "")

result[colName] = rowsCur.v

}

}

}

for (var r=0, rowsCount=rows.length; r<rowsCount; r++) {

var result = {};

recurse(schema, rows[r], "")

resultRows.push(result)

}

return resultRows

}

const rows = convertBQToMySQLResults(items[0].json.schema.fields, items[0].json.rows);

return rows.map(row => ({json: row}));

6. Save to Sheet

Background

Im Neewbie with n8n and this is my first Concept how to use it. Your Feedbacks are welcome. I personally use it to automatise whole Processing.

- My Co-workers put daily Data into Sheets.

- Then they start AppScript and Data are uploaded automatically to BigQuery.

- After success, they call this n8n Scenario via Webhook/HTTP Request (via AppScript)

- n8n do this great Job and my colleagues receive just nice updated Sheet

Workflow code - (Copy& Paste (ctrl + v or cmd + v) anywhere in the n8n window)

{

"nodes": [

{

"parameters": {},

"name": "Start",

"type": "n8n-nodes-base.start",

"typeVersion": 1,

"position": [

210,

300

]

},

{

"parameters": {

"authentication": "oAuth2",

"requestMethod": "POST",

"url": "https://bigquery.googleapis.com/bigquery/v2/projects/[Your-Project-ID]/queries",

"responseFormat": "string",

"jsonParameters": true,

"options": {},

"bodyParametersJson": "={\"query\": \"Your Query\", \"location\": \"europe-west3\", \"useLegacySql\": false, \"useQueryCache\": true}"

},

"name": "HTTP Request",

"type": "n8n-nodes-base.httpRequest",

"typeVersion": 1,

"position": [

360,

300

],

"credentials": {

"oAuth2Api": "Slavomir BQ"

}

},

{

"parameters": {

"keepOnlySet": true,

"values": {

"string": [

{

"name": "schema",

"value": "={{$json[\"data\"][\"schema\"]}}"

}

]

},

"options": {}

},

"name": "Schema",

"type": "n8n-nodes-base.set",

"typeVersion": 1,

"position": [

520,

240

]

},

{

"parameters": {

"keepOnlySet": true,

"values": {

"string": [

{

"name": "rows",

"value": "={{$json[\"data\"][\"rows\"]}}"

}

]

},

"options": {}

},

"name": "Rows",

"type": "n8n-nodes-base.set",

"typeVersion": 1,

"position": [

520,

380

]

},

{

"parameters": {

"operation": "append",

"sheetId": "your sheet",

"range": "test!A:E",

"options": {}

},

"name": "Google Sheets",

"type": "n8n-nodes-base.googleSheets",

"typeVersion": 1,

"position": [

1040,

310

],

"credentials": {

"googleApi": "Api-Bot"

}

},

{

"parameters": {

"mode": "mergeByIndex",

"join": "inner"

},

"name": "Prepare Input",

"type": "n8n-nodes-base.merge",

"typeVersion": 1,

"position": [

690,

310

],

"notesInFlow": true,

"notes": "Merge Header & Rows"

},

{

"parameters": {

"functionCode": "function convertBQToMySQLResults(schema, rows) {\n\n var resultRows = []\n \n function recurse (schemaCur, rowsCur, colName) {\n\n if (Array.isArray(schemaCur) && !Array.isArray(result[colName])) {\n for(var i=0, l=schemaCur.length; i<l; i++) {\n if (colName === \"\")\n recurse(schemaCur[i], rowsCur.f[i], colName + schemaCur[i].name)\n else\n recurse(schemaCur[i], rowsCur.f[i], colName + \".\" + schemaCur[i].name)\n } \n }\n\n if (schemaCur.type && schemaCur.type === \"RECORD\") {\n if (schemaCur.mode !== \"REPEATED\") {\n var valIndex = 0\n for (var p in schemaCur.fields) {\n if (rowsCur.v === null) {\n recurse(schemaCur.fields[p], rowsCur, colName + \".\" + schemaCur.fields[p].name)\n } else {\n recurse(schemaCur.fields[p], rowsCur.v.f[valIndex], colName + \".\" + schemaCur.fields[p].name)\n }\n \n valIndex++\n }\n } \n \n if (schemaCur.mode === \"REPEATED\") { \n result[colName] = [] \n for (var x in rowsCur.v) {\n recurse(schemaCur.fields, rowsCur.v[x], colName)\n }\n }\n } else {\n if (schemaCur.mode === \"REPEATED\") {\n if (rowsCur.v !== null) {\n result[colName] = rowsCur.v.map( (value, index) => { return value.v })\n } else {\n result[colName] = [ null ]\n }\n \n } else if (Array.isArray(result[colName])) {\n let nextRow = {} \n for (var j in schemaCur) {\n nextRow[colName + \".\" + schemaCur[j].name] = Array.isArray(rowsCur.v.f[j].v) ? rowsCur.v.f[j].v.map( (value, index) => { return value.v }) : rowsCur.v.f[j].v \n }\n result[colName].push(nextRow)\n } else {\n if (colName !== \"\")\n result[colName] = rowsCur.v\n }\n }\n }\n\n for (var r=0, rowsCount=rows.length; r<rowsCount; r++) {\n var result = {};\n recurse(schema, rows[r], \"\")\n resultRows.push(result)\n }\n\n return resultRows\n}\n\nconst rows = convertBQToMySQLResults(items[0].json.schema.fields, items[0].json.rows);\nreturn rows.map(row => ({json: row}));"

},

"name": "Convert",

"type": "n8n-nodes-base.function",

"typeVersion": 1,

"position": [

860,

310

]

}

],

"connections": {

"Start": {

"main": [

[

{

"node": "HTTP Request",

"type": "main",

"index": 0

}

]

]

},

"HTTP Request": {

"main": [

[

{

"node": "Schema",

"type": "main",

"index": 0

},

{

"node": "Rows",

"type": "main",

"index": 0

}

]

]

},

"Schema": {

"main": [

[

{

"node": "Prepare Input",

"type": "main",

"index": 0

}

]

]

},

"Rows": {

"main": [

[

{

"node": "Prepare Input",

"type": "main",

"index": 1

}

]

]

},

"Prepare Input": {

"main": [

[

{

"node": "Convert",

"type": "main",

"index": 0

}

]

]

},

"Convert": {

"main": [

[

{

"node": "Google Sheets",

"type": "main",

"index": 0

}

]

]

}

}

}