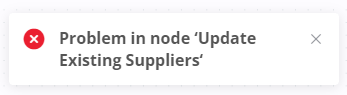

I am using the Microsoft SQL node to do some updates and encountered an error from the UI:

I have seen the related issue: PayloadTooLargeError: request entity too large for 100kb request which talks about limits on (input) file sizes and also on Request Size. I guess I am breaching the size of the request but my input file is < 1MB. It contains 9736 records.

On digging into the logs, I found:

PayloadTooLargeError: request entity too large

at readStream (/usr/local/lib/node_modules/n8n/node_modules/raw-body/index.js:156:17)

at getRawBody (/usr/local/lib/node_modules/n8n/node_modules/raw-body/index.js:109:12)

at read (/usr/local/lib/node_modules/n8n/node_modules/body-parser/lib/read.js:79:3)

at jsonParser (/usr/local/lib/node_modules/n8n/node_modules/body-parser/lib/types/json.js:135:5)

at Layer.handle [as handle_request] (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/layer.js:95:5)

at trim_prefix (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:328:13)

at /usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:286:9

at Function.process_params (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:346:12)

at next (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:280:10)

at /usr/local/lib/node_modules/n8n/dist/src/Server.js:364:13

at Layer.handle [as handle_request] (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/layer.js:95:5)

at trim_prefix (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:328:13)

at /usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:286:9

at Function.process_params (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:346:12)

at next (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:280:10)

at compression (/usr/local/lib/node_modules/n8n/node_modules/compression/index.js:220:5)

at Layer.handle [as handle_request] (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/layer.js:95:5)

at trim_prefix (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:328:13)

at /usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:286:9

at Function.process_params (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:346:12)

at next (/usr/local/lib/node_modules/n8n/node_modules/express/lib/router/index.js:280:10)

at /usr/local/lib/node_modules/n8n/dist/src/Server.js:358:13

I guess there is a limit to the number of records the node can handle - possibly limited by host memory ? In this example, the workflow was throwing 9,786 records at the UPDATE node.

I am keen to know what the limit is or how I might be able to predict this limit so I can prevent / throttle the number of records presented to an UPDATE action on a SQL node.

Does this mean I should be setting N8N_PAYLOAD_SIZE_MAX to a bigger value (currently set to 16) ?

- n8n version: 0.197.1

- Database you’re using (default: SQLite): Postgres for the system but in this case, Microsoft SQL Server

- Running n8n via Docker