OK, I’ve got a solution that works now. I’m sure there are quicker or more optimal ways of doing this, but it will do me for now, and it was a learning exercise too.

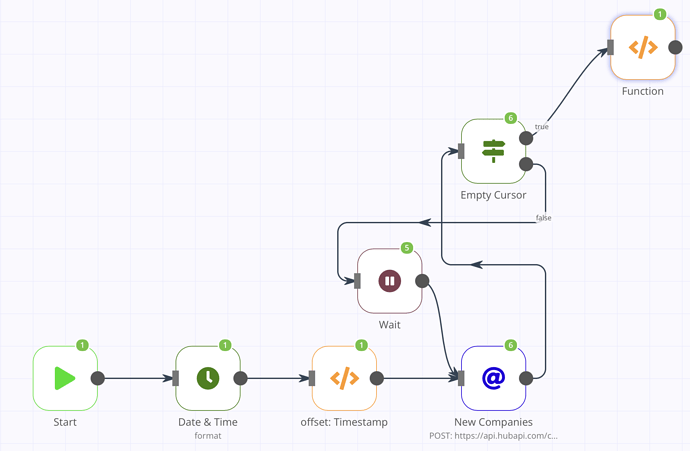

My aim was to set up a one-way sync of new Companies created in HubSpot to a staging table in our data warehouse (Postgres). To make this work, I’ve created an offsets table in the data warehouse that stores a timestamp offset and a batch number.
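A minimal sketch of how the offset and batch number could move forward on each run, written in plain Python rather than as n8n nodes. The `Offset` shape, the `createdate` key on each company and the choice not to bump the batch number on an empty run are all assumptions for illustration, not the actual offsets table schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Offset:
    """One row of a hypothetical offsets table."""
    last_created_at: datetime
    batch_number: int


def advance_offset(offset: Offset, companies: list[dict]) -> tuple[list[dict], Offset]:
    """Keep companies newer than the stored offset and compute the next offset row.

    Assumes each company dict carries a timezone-aware `createdate` datetime.
    """
    new = [c for c in companies if c["createdate"] > offset.last_created_at]
    if not new:
        # Nothing new: keep the old timestamp and don't use up a batch number.
        return [], offset
    latest = max(c["createdate"] for c in new)
    return new, Offset(last_created_at=latest, batch_number=offset.batch_number + 1)
```

Moving the timestamp to the newest `createdate` actually seen (rather than "now") means a company created while the run is in progress is still picked up next time.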

The pagination works nicely now: I’m pulling 50 items per page when I search for new companies, and I’ve managed to combine the pages using a Function node (thanks to @RicardoE105 and @jan for the inspiration in other forum posts).
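The paginate-then-combine idea can be sketched outside n8n as a loop that follows the cursor and flattens every page into one list. This assumes the HubSpot CRM search response shape, where each page has a `results` list and, when more pages exist, a `paging.next.after` cursor; `fetch_page` is a hypothetical stand-in for the actual HTTP request.

```python
from typing import Callable, Optional

PAGE_SIZE = 50  # matches the 50 items per page used in the workflow


def fetch_all(fetch_page: Callable[[Optional[str]], dict]) -> list[dict]:
    """Follow paging.next.after until it runs out, flattening each page's results.

    `fetch_page(after)` is a hypothetical callable that performs one search
    request (after=None for the first page) and returns the decoded JSON body.
    """
    combined: list[dict] = []
    after: Optional[str] = None
    while True:
        page = fetch_page(after)
        combined.extend(page.get("results", []))
        # No `paging.next.after` means this was the last page.
        after = page.get("paging", {}).get("next", {}).get("after")
        if after is None:
            return combined
```

Injecting `fetch_page` keeps the combining logic testable with fake pages and leaves the rate-limit waits to whatever makes the real requests.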

Before I insert the items into the data warehouse, I also assign each one a UUID and associate it with the batch number created earlier in the workflow (this will help with data processing downstream).
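A small sketch of the tagging step: each company gets a fresh random UUID plus the batch number for the current run. The output field names (`id`, `batch_number`, `hubspot_id`, `properties`) are hypothetical, chosen only to make the shape of a staging row concrete.

```python
import uuid


def tag_for_insert(companies: list[dict], batch_number: int) -> list[dict]:
    """Attach a random UUID and the run's batch number to each company (hypothetical row shape)."""
    return [
        {
            "id": str(uuid.uuid4()),           # surrogate key for the staging row
            "batch_number": batch_number,      # ties every row to this run
            "hubspot_id": company["id"],       # keep HubSpot's own id for joins later
            "properties": company.get("properties", {}),
        }
        for company in companies
    ]
```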

At a couple of points I introduce waiting time so I don’t hit the HubSpot API rate limits (150 requests every 10 seconds on the Professional edition and lower on the entry-level HubSpot plans, so I’ve gone for 100 requests per 10 seconds).
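The budget works out to 100 requests / 10 s = 10 requests per second on average. A rolling-window limiter is one way to enforce that in code; this is a generic sketch, not how n8n's wait steps work internally. The clock and sleep functions are injectable so the behaviour can be checked without real waiting.

```python
import time
from collections import deque
from typing import Callable


class WindowLimiter:
    """Allow at most `max_calls` calls in any rolling window of `window` seconds."""

    def __init__(self, max_calls: int = 100, window: float = 10.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_calls = max_calls
        self.window = window
        self.clock = clock
        self.sleep = sleep
        self.calls: deque[float] = deque()  # timestamps of recent calls

    def acquire(self) -> None:
        """Block until one more call fits in the window, then record it."""
        while True:
            now = self.clock()
            # Forget calls that have left the rolling window.
            while self.calls and now - self.calls[0] >= self.window:
                self.calls.popleft()
            if len(self.calls) < self.max_calls:
                self.calls.append(now)
                return
            # Sleep until the oldest call in the window expires, then re-check.
            self.sleep(self.window - (now - self.calls[0]))
```

Call `limiter.acquire()` before each API request; staying at 100 rather than 150 leaves headroom for other integrations sharing the same HubSpot account.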

This data is going to be part of a data model built with dbt, so I’ve simply inserted the HubSpot properties retrieved from the API into a single JSON column in the database (I’ll disassemble it later).
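A sketch of the insert with the properties serialised into one JSON value. The table `staging.hubspot_companies` and its columns are hypothetical; the `%s` placeholders follow the DB-API "format" style used by Postgres drivers such as psycopg2, and `::jsonb` casts the text parameter to a JSONB column.

```python
import json

# Hypothetical staging table; adjust schema, table and column names to match yours.
INSERT_SQL = (
    "INSERT INTO staging.hubspot_companies (id, batch_number, properties) "
    "VALUES (%s, %s, %s::jsonb)"
)


def to_params(row: dict) -> tuple:
    """Turn one tagged company row into parameters for INSERT_SQL."""
    return (
        row["id"],
        row["batch_number"],
        json.dumps(row["properties"], sort_keys=True),  # whole property bag as one JSON value
    )
```

With a DB-API cursor this would be `cursor.executemany(INSERT_SQL, [to_params(r) for r in rows])`. Later, a dbt model can pull individual fields out with Postgres JSON operators, for example `properties->>'name'`.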

Looking back over it, I can see a couple of areas with room for improvement, especially when the API returns no items: the workflow just stops at the Combine Companies node. That still works, but I’ll need to improve it in the future.
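One possible way to handle the empty case is to decide up front what the rest of the run should do and always emit an explicit summary, rather than letting the flow stop silently. This is a plain-Python sketch; `plan_run` and its `action` values are hypothetical.

```python
def plan_run(companies: list[dict], batch_number: int) -> dict:
    """Summarise what the rest of the run should do (hypothetical helper)."""
    if not companies:
        # Empty search result: say so explicitly and leave the offset untouched.
        return {"action": "skip", "batch_number": batch_number, "count": 0}
    return {"action": "insert", "batch_number": batch_number, "count": len(companies)}
```

Branching on `action` downstream gives the run a clear end state that can be logged even when there is nothing to insert.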

Anyway, I’ve learnt more about n8n in the process, and I’ve got a working solution that, with a few tweaks, will get us synchronising HubSpot entities to our data warehouse for use in real customer analytics.

I also learnt that when you see a workflow on the forums that you want to try, you can simply copy it and paste it onto the workflow canvas! That has saved a lot of time!
