Describe the problem/error/question

I have an error workflow attached to most of my workflows; it posts errors to Slack for monitoring.

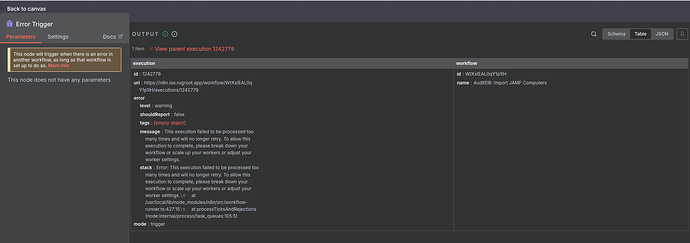

I have noticed that I am receiving daily, consistent errors from a handful of my workflows (typically longer-running workflows that take 15-30 minutes to complete) with the error message:

This execution failed to be processed too many times and will no longer retry. To allow this execution to complete, please break down your workflow or scale up your workers or adjust your worker settings.

The odd part is that each time I review, the workflow that triggered the error shows that it completed successfully and in full. All execution data is available and appears perfectly healthy. I do not understand why the error workflow is being triggered when the execution completes successfully and without error.

Here is an example of the Error Trigger received by the error workflow:

    {
      "execution": {
        "id": "441814",
        "url": "https://example.com/workflow/lTkzzQguBR2xYT04/executions/441814",
        "error": {
          "level": "warning",
          "shouldReport": false,
          "tags": {},
          "message": "This execution failed to be processed too many times and will no longer retry. To allow this execution to complete, please break down your workflow or scale up your workers or adjust your worker settings.",
          "stack": "Error: This execution failed to be processed too many times and will no longer retry. To allow this execution to complete, please break down your workflow or scale up your workers or adjust your worker settings.\n at /usr/local/lib/node_modules/n8n/dist/workflow-runner.js:277:29\n at processTicksAndRejections (node:internal/process/task_queues:95:5)"
        },
        "mode": "trigger"
      },
      "workflow": {
        "id": "lTkzzQguBR2xYT04",
        "name": "My workflow"
      }
    }
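To make these reports easier to tally, here is a minimal Python sketch that pulls the relevant fields out of an Error Trigger payload shaped like the one above and flags this specific message. The function name `summarize_error_payload` and the constant `RETRY_LIMIT_PREFIX` are hypothetical names of my own, not part of n8n, and the sample payload is trimmed to the fields used.

```python
# Hypothetical helper for illustration; not part of n8n.
RETRY_LIMIT_PREFIX = "This execution failed to be processed too many times"


def summarize_error_payload(payload: dict) -> dict:
    """Extract the IDs, level, and a flag for the retry-limit message."""
    execution = payload.get("execution", {})
    error = execution.get("error", {})
    message = error.get("message", "")
    return {
        "execution_id": execution.get("id"),
        "workflow_id": payload.get("workflow", {}).get("id"),
        "level": error.get("level"),
        "is_retry_limit_error": message.startswith(RETRY_LIMIT_PREFIX),
    }


# Sample payload copied (and trimmed) from the Error Trigger output above.
sample = {
    "execution": {
        "id": "441814",
        "error": {
            "level": "warning",
            "message": (
                "This execution failed to be processed too many times "
                "and will no longer retry."
            ),
        },
        "mode": "trigger",
    },
    "workflow": {"id": "lTkzzQguBR2xYT04", "name": "My workflow"},
}

summary = summarize_error_payload(sample)
print(summary)
```

Matching on the message prefix is only an assumption about how stable that wording is across n8n versions.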

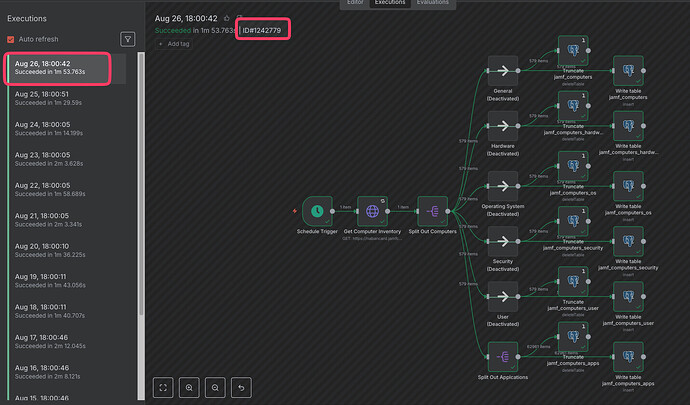

This is the execution data for the workflow which "errored":

    {
      "id": "441814",
      "finished": true,
      "mode": "trigger",
      "retryOf": null,
      "retrySuccessId": null,
      "status": "success",
      "createdAt": "2025-03-14T07:00:27.015Z",
      "startedAt": "2025-03-14T07:00:27.084Z",
      "stoppedAt": "2025-03-14T07:33:49.086Z",
      "deletedAt": null,
      "workflowId": "lTkzzQguBR2xYT04",
      "waitTill": null
    }

This is the execution data for the triggered error workflow:

    {
      "id": "444657",
      "finished": true,
      "mode": "error",
      "retryOf": null,
      "retrySuccessId": null,
      "status": "success",
      "createdAt": "2025-03-14T07:33:32.932Z",
      "startedAt": "2025-03-14T07:33:46.773Z",
      "stoppedAt": "2025-03-14T07:33:48.420Z",
      "deletedAt": null,
      "workflowId": "E30aY2L8VcZP7pQX",
      "waitTill": null
    }
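Lining up the timestamps from the two execution records may help narrow down when the error was raised relative to the main run. The sketch below is plain Python arithmetic on the values shown above; the helper name `parse_ts` is my own and nothing here calls n8n.

```python
from datetime import datetime


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp that ends in 'Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


# Timestamps copied from the two execution records above.
main_started = parse_ts("2025-03-14T07:00:27.084Z")
main_stopped = parse_ts("2025-03-14T07:33:49.086Z")
error_created = parse_ts("2025-03-14T07:33:32.932Z")

# How long the main execution ran, in seconds.
run_seconds = (main_stopped - main_started).total_seconds()

# How long before the main execution stopped the error execution was created.
lead_seconds = (main_stopped - error_created).total_seconds()

print(f"main run time: {run_seconds:.3f} s")
print(f"error execution created {lead_seconds:.3f} s before main stoppedAt")
```

With these values the main execution ran for about 33 minutes 22 seconds, and the error workflow's execution was created roughly 16 seconds before the main execution's `stoppedAt`, so the error appears to have been raised while the main execution was still running.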

Information on your n8n setup

- n8n version: 1.82.1

- Database (default: SQLite): Postgres

- n8n EXECUTIONS_PROCESS setting (default: own, main):

- Running n8n via (Docker, npm, n8n cloud, desktop app): GCP cloudrun

- Operating system: