Good day everyone!

I've run into some non-obvious behavior in my workflow and hope someone can suggest a solution.

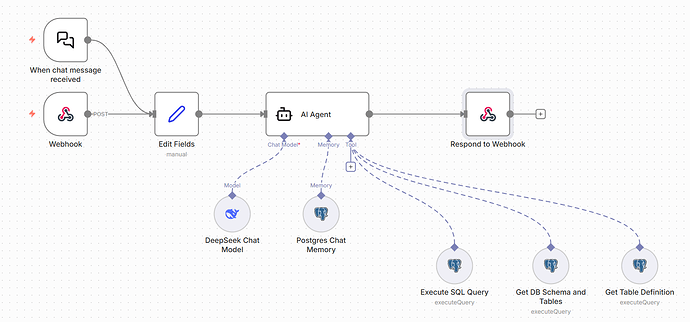

Configuration:

- The workflow implements chat with a PostgreSQL table via text-to-SQL.
- All control is delegated to the AI Agent, which decides which tool to use.
- Postgres nodes are used as tools (they execute the SQL queries).
- To keep context between requests, I connected the Postgres Chat Memory node (memory depth: the last 10 interactions).
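A minimal sketch of what a memory depth of 10 interactions means, assuming the node keeps a sliding window of the last 10 user/agent message pairs. The class and method names are hypothetical and are not n8n's API. The sketch also stores only each user input and the agent's final reply; whether the real node stores tool calls as well is not confirmed here.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "human" or "ai"
    content: str


class WindowMemory:
    """Hypothetical sliding-window chat memory: keeps the last k interactions."""

    def __init__(self, k: int = 10):
        self.k = k
        # One interaction = one human message + one AI reply, so 2 * k messages.
        self.messages: deque = deque(maxlen=2 * k)

    def save_interaction(self, user_input: str, ai_output: str) -> None:
        # Only the question and the final answer are stored in this sketch.
        self.messages.append(Message("human", user_input))
        self.messages.append(Message("ai", ai_output))

    def load(self) -> list:
        # History that would be prepended to the next agent call.
        return list(self.messages)


if __name__ == "__main__":
    memory = WindowMemory(k=10)
    for i in range(12):
        memory.save_interaction(f"question {i}", f"answer {i}")
    history = memory.load()
    print(len(history))         # 20 messages: the last 10 interactions
    print(history[0].content)   # oldest kept message is from interaction 2
```

Under these assumptions, by the third request the agent sees only 4 earlier messages, far below the window limit.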

Problem:

- Without memory, everything works correctly: the agent calls the Postgres tools every time and returns accurate results.
- With memory enabled, the agent does “remember” the previous questions, but only for the first two follow-up questions.
- From the third request in the chain onward, the agent stops invoking tools and instead produces fabricated answers (hallucinations).

Question:

What could be the reason for this behavior?

- Could the problem be context overflow (even though the depth is only 10)?
- Or does the agent “lose confidence” in the need to call tools as the dialog grows longer?
- Are there any recommendations for configuring memory or the prompt for scenarios like this?

Any ideas or links to the documentation are welcome!