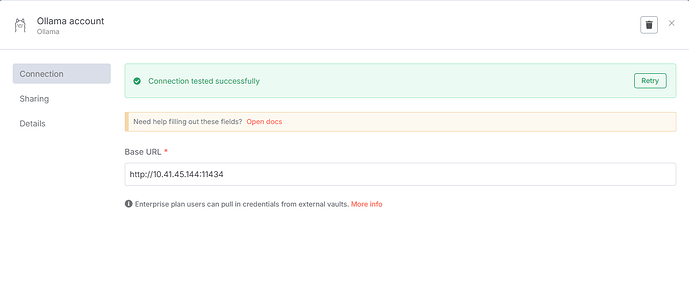

The workflow returns an error when using a local Ollama model, while the online DeepSeek model works fine.

Error message: fetch failed

Please share your workflow

Share the output returned by the last node

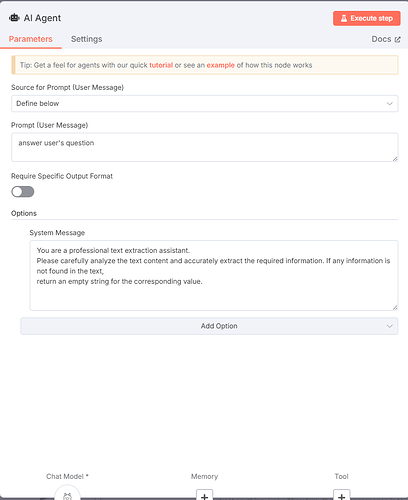

Error running node ‘AI Agent’

Stack trace

TypeError: fetch failed
    at node:internal/deps/undici/undici:13510:13
    at post (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/[email protected]/node_modules/ollama/dist/shared/ollama.9c897541.cjs:114:20)
    at Ollama.processStreamableRequest (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/[email protected]/node_modules/ollama/dist/shared/ollama.9c897541.cjs:253:22)
    at ChatOllama._streamResponseChunks (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected]_@[email protected][email protected][email protected][email protected]__/node_modules/@langchain/ollama/dist/chat_models.cjs:735:32)
    at ChatOllama._streamIterator (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/language_models/chat_models.cjs:100:34)
    at ChatOllama.transform (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/runnables/base.cjs:402:9)
    at RunnableBinding.transform (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/runnables/base.cjs:912:9)
    at ToolCallingAgentOutputParser.transform (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/runnables/base.cjs:391:26)
    at RunnableSequence._streamIterator (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/runnables/base.cjs:1349:30)
    at RunnableSequence.transform (/usr/local/lib/node_modules/n8n/node_modules/.pnpm/@[email protected][email protected][email protected][email protected]_/node_modules/@langchain/core/dist/runnables/base.cjs:402:9)

Information on your n8n setup

- n8n version: 1.97.1

- Database (default: SQLite): postgres:13-alpine

- n8n EXECUTIONS_PROCESS setting (default: own, main):

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker

- Operating system: Ubuntu 22.04

- Browser: Microsoft Edge on a Windows 10 machine on the LAN
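
Because n8n runs in Docker here, it may be worth checking whether the Ollama server is reachable from inside the n8n container. A bare `fetch failed` from undici usually hides the real network error in its `cause`. The sketch below is a hypothetical diagnostic, not part of n8n or the `ollama` package: `probeOllama`, `isLoopback`, and `FetchLike` are made-up names, and the post does not show which base URL is configured. It calls `GET /api/tags`, Ollama's endpoint for listing local models. It also flags loopback addresses, since `localhost` inside a container refers to the container itself rather than the Docker host. Whether that is the cause in this setup is an assumption.

```typescript
// Hypothetical diagnostic helper; names are illustrative only.
type FetchLike = (url: string) => Promise<{ ok: boolean; status: number }>;
type Probe = { ok: boolean; detail: string };

// True when the URL points at the loopback interface. Inside a container,
// loopback is the container itself, not the Docker host.
function isLoopback(baseUrl: string): boolean {
  const host = new URL(baseUrl).hostname;
  return host === "localhost" || host === "127.0.0.1" || host === "[::1]";
}

// Any HTTP response from /api/tags shows the server is reachable from
// wherever this code runs. fetchImpl is injectable so it can be stubbed.
async function probeOllama(
  baseUrl: string,
  fetchImpl: FetchLike = (u) => fetch(u),
): Promise<Probe> {
  const url = new URL("/api/tags", baseUrl).toString();
  try {
    const res = await fetchImpl(url);
    return { ok: res.ok, detail: `HTTP ${res.status}` };
  } catch (err) {
    // undici attaches the underlying error (e.g. ECONNREFUSED) as `cause`.
    const code = (err as { cause?: { code?: string } }).cause?.code;
    const hint = isLoopback(baseUrl)
      ? " (loopback address: inside Docker this is the container itself)"
      : "";
    return { ok: false, detail: `${String(err)}${code ? ` [${code}]` : ""}${hint}` };
  }
}
```

Run from inside the n8n container, something like `probeOllama("http://localhost:11434").then(console.log)` would show whether the failure is a refused connection, a DNS error, or something else. Replace the URL with the base URL set in the n8n Ollama credential.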