Hello,

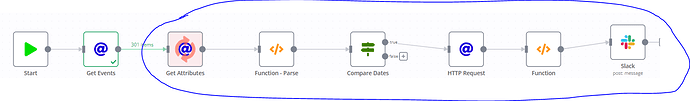

Just like the title says, I’m trying to run several nodes (the ones inside the blue area) for each item the first node returns.

The first node returns 301 events, and every event contains hundreds or thousands of attributes that I have to parse. Then, depending on a given date, I have to get more information and run one last function. Finally, a message is sent via Slack.

I know I could probably do this with an auxiliary workflow and just call it with the Workflow Trigger, but I was trying to avoid this.

Is there another way?

Thank you. Kind regards,

Rob

Information about the n8n setup:

- n8n version:
- Database: SQLite
- Running n8n with the execution process: own (default)
- Running n8n via: Docker

Hey @robjennings, hope you’re well?

> The node returns me 301 events, and inside every event there are hundreds or thousands of attributes that I have to parse, and then depending on a given date i have to get more information and then run one last function. Finally there is a message that is sent via slack.

So your problem would be that you could end up with too much data in your workflow? If so, I think using a sub-workflow would indeed simplify things. It can definitely reduce the memory footprint of your workflow.

You wouldn’t typically use a Workflow Trigger for this, though, but rather a Split In Batches node combined with an Execute Workflow node.

So your parent workflow could look like so:

The Split In Batches node ensures that only one dataset is passed on to your child workflow at a given time. In your child workflow you can then do the heavy lifting and only return a small manageable dataset. Once your child workflow finishes, execution is returned to the parent again.
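To make the pattern a bit more concrete outside of the n8n editor, here is a minimal Python sketch of the same idea. It is only an illustration, not n8n code: `split_in_batches`, `child_workflow`, and `parent_workflow` are hypothetical names standing in for the Split In Batches node, the child workflow, and the parent loop, and the `id` / `attributes` fields are assumed event fields.

```python
from typing import Any, Dict, Iterator, List

Event = Dict[str, Any]


def split_in_batches(items: List[Event], batch_size: int = 1) -> Iterator[List[Event]]:
    """Yield consecutive slices of `items` (hypothetical stand-in for Split In Batches)."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def child_workflow(batch: List[Event]) -> List[Dict[str, Any]]:
    """Hypothetical child workflow: do the heavy parsing, return only a small summary."""
    summaries = []
    for event in batch:
        attributes = event.get("attributes", [])  # assumed field holding the large payload
        summaries.append({"id": event["id"], "attribute_count": len(attributes)})
    return summaries


def parent_workflow(events: List[Event]) -> List[Dict[str, Any]]:
    """Hand one batch at a time to the child and keep only its small results."""
    results: List[Dict[str, Any]] = []
    for batch in split_in_batches(events, batch_size=1):
        results.extend(child_workflow(batch))
    return results


if __name__ == "__main__":
    # 301 made-up events, each with a large attribute list
    events = [{"id": i, "attributes": list(range(1000))} for i in range(301)]
    summaries = parent_workflow(events)
    print(len(summaries), summaries[0])
```

The point of the design is that the parent only ever collects the small summaries, while the large attribute lists are handled inside the child one batch at a time.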


Will try that. Thank you very much.