Hey @First_Spark_Digital,

From your response, I am guessing you were able to mitigate the Timeout error but want to speed up the response times now.

Let me share my experience with this:

I can only make a set number of calls per minute for the workflows that I have set up and for the usage tier I have in OpenAI.

There are two ways (that I know of) to stay within those limits. One is to use the Wait node together with n8n’s built-in OpenAI node. It is easy to manage, and if you have multiple inputs to process, you can time the requests according to your account’s tier limits in OpenAI.

For example, I usually get 35 to 50 entries that must be sent to OpenAI to generate completions. I can send them in bulk, but sometimes the token count is large and I get an error. That is when I started using the Wait node to split them into separate calls and make the flow wait a few seconds before each call to OpenAI, to avoid rate limit errors.

This has consistently given response times of under 1 minute from OpenAI for each call. Responses often come back in less than 40 seconds for 2K input tokens and 1K to 1.5K output tokens.

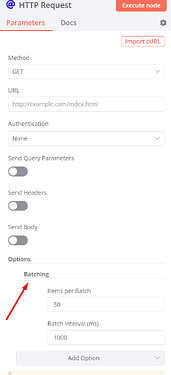
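If it helps to see the idea outside of n8n, here is a rough Python sketch of the same pattern: work out a wait time from a requests-per-minute limit, then send the entries one by one with that pause in between. The function names, the `send` callback, and the 20 requests-per-minute figure are only assumptions for illustration. They are not n8n or OpenAI APIs, so plug in your own tier limit.

```python
import time
from typing import Callable, Iterable, List


def seconds_between_calls(requests_per_minute: int) -> float:
    """Minimum spacing between calls that stays at or under the limit."""
    if requests_per_minute <= 0:
        raise ValueError("requests_per_minute must be positive")
    return 60.0 / requests_per_minute


def process_one_at_a_time(
    entries: Iterable[str],
    send: Callable[[str], str],
    wait_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Send each entry as its own call, pausing before every call after the first."""
    results: List[str] = []
    for index, entry in enumerate(entries):
        if index > 0:
            sleep(wait_seconds)  # plays the role of the Wait node
        results.append(send(entry))  # `send` is a placeholder for the OpenAI call
    return results


if __name__ == "__main__":
    # Assumed limit of 20 requests per minute, purely as an example.
    wait = seconds_between_calls(20)
    print(f"Wait {wait} seconds between calls")
```

With 35 to 50 entries, a 3 second gap adds roughly 2 to 2.5 minutes of waiting in total, which is the price for not hitting the rate limit.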

The other way is to use the HTTP Request node to handle the batching and delay.

To batch the inputs and delay each call:

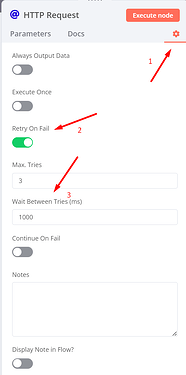
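Roughly speaking, batching with a delay does something like the Python sketch below: split the inputs into groups of a fixed size and pause for a fixed interval between groups. This is only an illustration of the idea, not n8n's actual implementation. `send_batch`, `batch_size`, and `interval_seconds` are hypothetical names standing in for the request and the batch settings.

```python
import time
from typing import Callable, List, Sequence


def split_into_batches(items: Sequence[str], batch_size: int) -> List[List[str]]:
    """Chunk items into consecutive groups of at most batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


def send_in_batches(
    items: Sequence[str],
    send_batch: Callable[[List[str]], List[str]],
    batch_size: int,
    interval_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Send one batch at a time, pausing between batches (not before the first)."""
    results: List[str] = []
    for number, batch in enumerate(split_into_batches(items, batch_size)):
        if number > 0:
            sleep(interval_seconds)
        results.extend(send_batch(batch))  # placeholder for the HTTP call
    return results
```

A batch size of 1 gives the same "one request at a time" behaviour as the Wait node approach.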

Adding a retry step just to be sure:
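As a rough idea of what a retry step does, here is a minimal Python sketch. It retries a call a limited number of times and waits longer after each failure. The doubling wait (exponential backoff) is my own choice for the example, and `RateLimitError`, `call_with_retry`, `max_tries`, and `wait_seconds` are hypothetical names, not n8n settings or OpenAI classes.

```python
import time
from typing import Callable


class RateLimitError(Exception):
    """Hypothetical error standing in for a rate limit (HTTP 429) response."""


def call_with_retry(
    call: Callable[[], str],
    max_tries: int = 3,
    wait_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try the call up to max_tries times, doubling the wait after each failure."""
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    for attempt in range(1, max_tries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_tries:
                raise  # out of tries, let the error surface
            sleep(wait_seconds * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")  # the loop always returns or raises
```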

Also, I could see that sending the requests in batches of one solved the issue for the other user (in the post that you shared).

Maybe if you could share your workflow here, someone from n8n’s team can help further.