Yeah, thanks for all the help so far, Jayavel. Much appreciated.

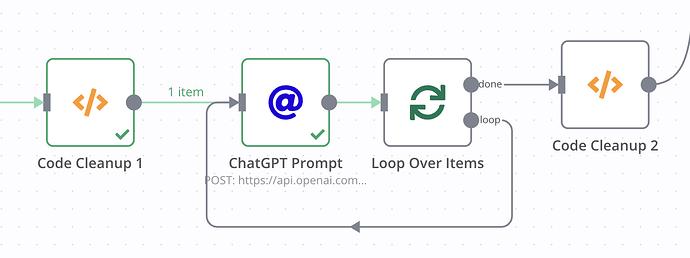

The Loop Over Items node helps with the timeout, but I'm not sure yet how it will work with the batching, because in theory only one request gets sent to OpenAI per run. If I get multiple hits on my website (intake form), then I think the batching would come into play.
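To make the batching question concrete, here is a minimal sketch of how a batch size carves up incoming items. It is not n8n's internal code: `splitIntoBatches` and `batchSize` are hypothetical names for illustration, and it assumes several submissions arrive as items in the same run.

```javascript
// Illustration only: splitIntoBatches is a made-up helper, not an n8n API.
// It mimics how a batch size groups incoming items into loop iterations.
function splitIntoBatches(items, batchSize = 1) {
  const batches = [];
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}

// One intake-form submission: a single item, so a single loop pass.
const oneSubmission = [{ json: { name: "first" } }];

// Three submissions in the same run (an assumption): three passes at size 1.
const threeSubmissions = [
  { json: { name: "first" } },
  { json: { name: "second" } },
  { json: { name: "third" } },
];

console.log(splitIntoBatches(oneSubmission).length);       // 1
console.log(splitIntoBatches(threeSubmissions).length);    // 3
console.log(splitIntoBatches(threeSubmissions, 2).length); // 2
```

With a single item there is only one pass through the loop, so the Wait node only starts to matter once more than one item reaches the loop in the same run.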

{
  "meta": {
    "instanceId": "cec86eeddef9ce9510df5fa3d831594fc68385b4da5fcc5a2c862e33863b9ffe"
  },
  "nodes": [
    {
      "parameters": {
        "method": "POST",
        "url": "https://api.openai.com/v1/chat/completions",
        "authentication": "predefinedCredentialType",
        "nodeCredentialType": "openAiApi",
        "sendBody": true,
        "specifyBody": "json",
        "jsonBody": "=",
        "options": {}
      },
      "id": "922f1a8e-096c-41a3-8d4b-a90057b0f22d",
      "name": "ChatGPT Prompt",
      "type": "n8n-nodes-base.httpRequest",
      "typeVersion": 4.1,
      "position": [
        2300,
        580
      ],
      "credentials": {
        "openAiApi": {
          "id": "annZJgpkrYsgn9Y1",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "jsCode": "let data = $input.all();\n\nconst removeProblematicCharacters = (text) => {\n return text.replace(/“|\"|”/g, '\\\\\"');\n };\n\nlet result = [];\ndata.forEach((item, index) => {\n console.log(\"keys are \", item.json)\n\n let currentRes = {}\n for (let key in item.json) {\n let val = item.json[key];\n\n let sanitized;\n if (Array.isArray(val)) {\n let joined = val.join(\" \");\n sanitized = removeProblematicCharacters(joined);\n } else {\n sanitized = removeProblematicCharacters(val);\n }\n \n currentRes[key] = sanitized\n }\n\n result.push(currentRes);\n});\n\nreturn result;"
      },
      "id": "1e5347ff-52a3-4b53-b912-b4b462553441",
      "name": "Code Cleanup 1",
      "type": "n8n-nodes-base.code",
      "typeVersion": 2,
      "position": [
        1700,
        300
      ]
    },
    {
      "parameters": {
        "mode": "runOnceForEachItem",
        "jsCode": "\n// this is the gpt 'content' value from the response of the http-request node\nlet content = $input[\"item\"][\"json\"][\"choices\"][0][\"message\"][\"content\"];\n\nreturn {\n \"scores\": JSON.parse(content)[\"scores\"]\n };"
      },
      "id": "d3318543-ca7b-4806-b2e7-d1294c55f8c8",
      "name": "Code Cleanup 2",
      "type": "n8n-nodes-base.code",
      "typeVersion": 2,
      "position": [
        2120,
        280
      ]
    },
    {
      "parameters": {
        "options": {}
      },
      "id": "9f055919-a712-496e-8106-30e7ee517c83",
      "name": "Loop Over Items",
      "type": "n8n-nodes-base.splitInBatches",
      "typeVersion": 3,
      "position": [
        1900,
        300
      ]
    },
    {
      "parameters": {
        "amount": 3,
        "unit": "seconds"
      },
      "id": "0987df1e-6451-4334-b78f-a55a386fd645",
      "name": "Wait",
      "type": "n8n-nodes-base.wait",
      "typeVersion": 1,
      "position": [
        2120,
        460
      ],
      "webhookId": "ddb22e91-fbbe-4ffc-96ba-b093fb91f801"
    }
  ],
  "connections": {
    "ChatGPT Prompt": {
      "main": [
        [
          {
            "node": "Loop Over Items",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Code Cleanup 1": {
      "main": [
        [
          {
            "node": "Loop Over Items",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Loop Over Items": {
      "main": [
        [
          {
            "node": "Code Cleanup 2",
            "type": "main",
            "index": 0
          }
        ],
        [
          {
            "node": "Wait",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Wait": {
      "main": [
        [
          {
            "node": "ChatGPT Prompt",
            "type": "main",
            "index": 0
          }
        ]
      ]
    }
  }
}
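For anyone who wants to try the Code Cleanup 1 logic outside n8n, here is a rough standalone sketch of the same quote-escaping idea. The `sanitizeItem` helper is a hypothetical name, and coercing non-string values with `String()` is an added assumption: the node as posted calls `.replace` directly, which would throw on numbers or null values.

```javascript
// Standalone sketch of the quote-escaping step, runnable in plain Node.js.
// sanitizeItem is a hypothetical helper name, not part of the workflow.
const removeProblematicCharacters = (text) =>
  // Replace curly and straight double quotes with a backslash-escaped quote.
  String(text).replace(/“|"|”/g, '\\"');

function sanitizeItem(json) {
  const cleaned = {};
  for (const [key, val] of Object.entries(json)) {
    // Arrays are flattened into a space-separated string, as in the node.
    const flat = Array.isArray(val) ? val.join(" ") : val;
    // Assumption: null/undefined become empty strings instead of throwing.
    cleaned[key] = removeProblematicCharacters(flat ?? "");
  }
  return cleaned;
}

const sample = { note: 'say "hi"', tags: ["x", "y"], count: 5, empty: null };
console.log(sanitizeItem(sample));
```

Whether the coercion is the right call depends on what the intake form actually sends, so treat it as one option rather than a fix.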