Ruriko

April 7, 2025, 6:27am


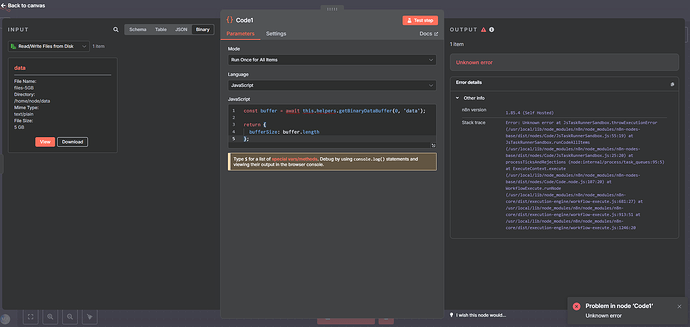

I’m trying to get the buffer size of a file, but the Code node fails to execute when the file is larger than 2 GB. I’ve already set N8N_DEFAULT_BINARY_DATA_MODE=filesystem, but the error still pops up. Uploading files larger than 2 GB with the HTTP Request node works, but reading the buffer size doesn’t:

File size (2179631187) is greater than 2 GiB null

**n8n version:** 1.83.2

**Database (default: SQLite):** default

**n8n EXECUTIONS_PROCESS setting (default: own, main):** default

**Running n8n via (Docker, npm, n8n cloud, desktop app):** npm

**Operating system:** Windows Server 2022


Hi @Ruriko

Did you try increasing the default value of the environment variable N8N_PAYLOAD_SIZE_MAX? See "Endpoints environment variables" in the n8n Docs.


@mohamed3nan, doesn’t this only apply to webhooks and similar endpoints? That’s why it’s listed as an endpoint environment variable, since 16 MB is the limit on cloud instances.

@jcuypers, I’m not entirely sure, to be honest; I’m just trying things out. Trial and error helps find a starting point for debugging the issue.


Several nodes have been changed to support files larger than 2 GB. Maybe the same changes should be made for this one?

Excellent, @netroy! I just tested uploading a file of almost 3 GB to Google Drive, and it worked.


Amazing work!

Note: yesterday I posted a new question about a similar problem with the AWS S3 node (upload operation). Maybe it’s the same problem? Help, please.

Maybe you can use the ‘Read Binary’ node?

He’s already using the Read node. The issue appears when he tries to get the buffer in the Code node for further operations:

```javascript
const buffer = await this.helpers.getBinaryDataBuffer(0, 'data');
```


Ruriko

April 7, 2025, 7:25am


I just tried, and no, it still won’t execute.

I have seen some info in other topics regarding these variables:

| Variable | Type | Default | Description |
| --- | --- | --- | --- |
| N8N_RUNNERS_MAX_PAYLOAD | Number | 1073741824 (1 GiB) | Maximum payload size in bytes for communication between a task broker and a task runner. |
| N8N_RUNNERS_MAX_OLD_SPACE_SIZE | String | | The `--max-old-space-size` option to use for a task runner (in MB). By default, Node.js will set this based on available memory. |

Not sure if it would help.

Ruriko

April 7, 2025, 7:57am


I tried setting N8N_RUNNERS_MAX_PAYLOAD=4294967296 (4 GiB), but I still can’t get the buffer size.

Not sure. I tried to simulate it and already got an error reading a 3 GB file:

which translates to around 500+ MB.

Ruriko

April 7, 2025, 8:11am


For that Read/Write node error, you have to set N8N_DEFAULT_BINARY_DATA_MODE=filesystem.


Okay, I replicated it with a 5 GB file and got an error:

Stack trace:

```
Error: Unknown error
    at JsTaskRunnerSandbox.throwExecutionError (/usr/local/lib/node_modules/n8n/node_modules/n8n-nodes-base/dist/nodes/Code/JsTaskRunnerSandbox.js:55:19)
    at JsTaskRunnerSandbox.runCodeAllItems (/usr/local/lib/node_modules/n8n/node_modules/n8n-nodes-base/dist/nodes/Code/JsTaskRunnerSandbox.js:25:20)
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at ExecuteContext.execute (/usr/local/lib/node_modules/n8n/node_modules/n8n-nodes-base/dist/nodes/Code/Code.node.js:107:20)
    at WorkflowExecute.runNode (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/workflow-execute.js:681:27)
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/workflow-execute.js:913:51
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/workflow-execute.js:1246:20
```

Same result with a 2.6 GB file.


I’m not sure if this is a bug we should report…


Hi,

The problem stems from here: `getAsBuffer` in filesystem mode uses Node’s standard `fs.readFile`, which supports a maximum of 2 GiB…

Indeed, a bug report or feature request should probably be filed.

Regards,

```typescript
async getAsBuffer(fileId: string) {
  const filePath = this.resolvePath(fileId);
  if (await doesNotExist(filePath)) {
    throw new FileNotFoundError(filePath);
  }
  return await fs.readFile(filePath);
}
```
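If all that’s needed is the size, there is no reason to read the contents at all. A minimal sketch, assuming the Code node is allowed to load the built-in `fs` module (for example via `NODE_FUNCTION_ALLOW_BUILTIN=fs`) and that you know the file’s path on disk (such as the path given to the Read Files node): `fs.promises.stat` reads only the file’s metadata, so the 2 GiB `readFile` limit does not apply. The helper name `getFileSizeBytes` is hypothetical.

```javascript
const fs = require("fs");

// Hypothetical helper: returns the size of a file in bytes without reading
// its contents into memory (stat only reads the file's metadata).
async function getFileSizeBytes(filePath) {
  const stats = await fs.promises.stat(filePath);
  // stats.size is a plain Number, exact for any size below 2^53 bytes.
  return stats.size;
}
```

In a Code node this could be used as `const size = await getFileSizeBytes("C:\\path\\to\\file.bin");`, where the path is a placeholder.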


How did you know there’s an explicit file size limit? I couldn’t find it in the code.

netroy

April 7, 2025, 9:09am


Is there a reason to read such large files into memory? Could you use file streams instead?
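A file stream reads the file in fixed-size chunks instead of loading it all at once, so memory use is bounded by the chunk size and the 2 GiB `readFile` limit never comes into play. A minimal sketch, assuming plain Node.js with access to the file path (the helper `countBytesStreaming` is hypothetical, not an n8n API), that totals a file’s size chunk by chunk using `fs.createReadStream` with the same `highWaterMark` option that n8n’s `getAsStream` passes:

```javascript
const { createReadStream } = require("fs");

// Hypothetical helper: walks a file chunk by chunk and returns the total
// number of bytes read. Only about `chunkSize` bytes are held in memory at
// a time, so it works for files larger than 2 GiB.
async function countBytesStreaming(filePath, chunkSize = 64 * 1024) {
  const stream = createReadStream(filePath, { highWaterMark: chunkSize });
  let total = 0;
  // Readable streams are async iterables; each chunk is a Buffer.
  for await (const chunk of stream) {
    total += chunk.length;
    // Process `chunk` here (hash it, forward it, upload it) instead of
    // accumulating all chunks in memory.
  }
  return total;
}
```

For a pure size check, `fs.stat` is cheaper; streaming pays off when the bytes themselves must be processed, as in hashing or chunked uploads.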


```typescript
async getAsStream(fileId: string, chunkSize?: number) {
  const filePath = this.resolvePath(fileId);
  if (await doesNotExist(filePath)) {
    throw new FileNotFoundError(filePath);
  }
  return createReadStream(filePath, { highWaterMark: chunkSize });
}

async getAsBuffer(fileId: string) {
  const filePath = this.resolvePath(fileId);
  if (await doesNotExist(filePath)) {
    throw new FileNotFoundError(filePath);
  }
  return await fs.readFile(filePath);
}

async getMetadata(fileId: string): Promise<BinaryData.Metadata> {
```

I searched the code, and after that I searched good old Google:

Issue opened 09:05AM - 15 Nov 24 UTC

Labels: fs, good first issue

### Version

v22.11.0

### Platform

```text

Darwin LAMS0127 23.6.0 Darwin Kerne… l Version 23.6.0: Thu Sep 12 23:36:23 PDT 2024; root:xnu-10063.141.1.701.1~1/RELEASE_ARM64_T6031 arm64 arm Darwin

```

### Subsystem

_No response_

### What steps will reproduce the bug?

```javascript
const fs = require("fs/promises");

const FILE = "test.bin";

async function main() {
  const buffer1 = Buffer.alloc(3 * 1024 * 1024 * 1024);
  await fs.writeFile(FILE, buffer1);
  const buffer2 = await fs.readFile(FILE);
  // does not reach here
  console.log(buffer2.length);
}

main();
```

### How often does it reproduce? Is there a required condition?

It is deterministic.

### What is the expected behavior? Why is that the expected behavior?

`readFile` should allow files as large as the maximum buffer size, according to the documentation:

> `ERR_FS_FILE_TOO_LARGE`
>
> An attempt has been made to read a file whose size is larger than the maximum allowed size for a Buffer.

https://nodejs.org/api/errors.html#err_fs_file_too_large

In newer Node versions, the maximum buffer size has increased, but the maximum file size is still capped at 2 GiB.

In older versions (v18), the maximum buffer size on 64-bit platforms was 4 GB, but files could not be that large either.

### What do you see instead?

`readFile` will throw the error

```

RangeError [ERR_FS_FILE_TOO_LARGE]: File size (3221225472) is greater than 2 GiB

```

### Additional information

_No response_

I’m not an AI cheater.

Also, there might already be a solution in the n8n GitHub code.

Regards


Well, we could, but it’s the internal helper functions that use the fixed (buffer-based) functions… and not the streams.

In addition, these are set:

- N8N_RUNNERS_ENABLED=true

- N8N_DEFAULT_BINARY_DATA_MODE=filesystem

- N8N_RUNNERS_MAX_PAYLOAD=4000000

- N8N_RUNNERS_MAX_OLD_SPACE_SIZE=4000
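All the `N8N_RUNNERS_MAX_PAYLOAD` values mentioned in this thread are in bytes, so a quick conversion makes them comparable. This is plain arithmetic and `toMiB` is only an illustrative helper: read as bytes, `4000000` is about 3.81 MiB, well below the documented 1 GiB default, while `4294967296` is exactly 4 GiB. The loop below prints 1024.00, 4096.00 and 3.81 MiB respectively.

```javascript
// Payload limits mentioned in this thread, all in bytes.
const payloads = {
  documentedDefault: 1073741824, // N8N_RUNNERS_MAX_PAYLOAD default
  triedEarlier: 4294967296,      // value tried earlier in the thread
  listAbove: 4000000,            // value in the list above
};

// Illustrative helper: bytes -> mebibytes (1 MiB = 1024 * 1024 bytes).
function toMiB(bytes) {
  return bytes / (1024 * 1024);
}

for (const [label, bytes] of Object.entries(payloads)) {
  console.log(`${label}: ${toMiB(bytes).toFixed(2)} MiB`);
}
```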

Hi @netroy ,

There’s a use case involving uploading large files to providers that support them, within limits. Some of them allow chunked uploads, for example 500 MB per chunk.

For example, if I have a 3GB file, I want to read it and use the Code node to split it into chunks and continue the operations from there.
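Splitting a file for a chunked upload does not require the whole file in memory: you only need the byte ranges, and each range can be read when it is uploaded. A minimal sketch, assuming access to the file path and a 500 MB chunk size taken here as 500 × 1024 × 1024 bytes (check how your provider defines it); `chunkRanges` and `readChunk` are hypothetical helpers, and `createReadStream`’s `start`/`end` options are inclusive byte offsets.

```javascript
const { createReadStream } = require("fs");

// Hypothetical helper: computes { start, end } byte ranges (end inclusive)
// covering a file of `totalBytes` in chunks of at most `chunkSize` bytes.
function chunkRanges(totalBytes, chunkSize) {
  const ranges = [];
  for (let start = 0; start < totalBytes; start += chunkSize) {
    const end = Math.min(start + chunkSize, totalBytes) - 1;
    ranges.push({ start, end });
  }
  return ranges;
}

// Hypothetical helper: reads one range into a Buffer. Only this chunk is
// held in memory, so each call stays far below the 2 GiB readFile limit.
async function readChunk(filePath, { start, end }) {
  const parts = [];
  for await (const part of createReadStream(filePath, { start, end })) {
    parts.push(part);
  }
  return Buffer.concat(parts);
}
```

For a 3 GiB file (3221225472 bytes) and 524288000-byte chunks, `chunkRanges` yields 7 ranges, the last covering bytes 3145728000 to 3221225471; each range is then read and uploaded one at a time.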

Also, what exactly did you mean by file streams? Could you please clarify?

Ruriko

April 7, 2025, 1:04pm


So it’s considered a bug? How do I convert the file size given by the Read Files node into bytes?
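The binary metadata exposes the file size as a rounded, human-readable string, so converting it back only gives an approximation, not the exact byte count. A minimal sketch, assuming the string has the form `<number> <unit>` (such as `2.18 GB`) and that units are decimal (1 kB = 1000 bytes) unless you pass a base of 1024; the exact format n8n emits is an assumption here, so check it against your own output. `parseHumanSize` is a hypothetical helper.

```javascript
// Hypothetical helper: converts a human-readable size such as "2.18 GB"
// into an approximate byte count. The input is rounded, so the result is
// only as precise as the string. Use base 1000 for decimal units
// (kB, MB, GB) or 1024 for binary units (KiB, MiB, GiB).
function parseHumanSize(text, base = 1000) {
  const match = /^\s*([\d.]+)\s*([kKMGT]?i?B)\s*$/.exec(text);
  if (!match) throw new Error(`Unrecognized size: ${text}`);
  const value = Number(match[1]);
  if (Number.isNaN(value)) throw new Error(`Unrecognized number: ${match[1]}`);
  // Normalize "kB", "KiB", "GiB" etc. to "KB", "GB" for the lookup.
  const unit = match[2].toUpperCase().replace("I", "");
  const exponents = { B: 0, KB: 1, MB: 2, GB: 3, TB: 4 };
  return Math.round(value * base ** exponents[unit]);
}
```

In a Code node this might be called as `parseHumanSize($input.first().binary.data.fileSize)`, assuming the binary property is named `data`.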

I feel like many of us are working on similar workflows.

By the way, the workaround I found until this is solved (or someone figures it out for us) is to use the Execute Command node to get the file size, followed by a Code node to parse it…
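For the Execute Command workaround, the command only has to print the size in bytes, and the Code node then turns that text into a number. A minimal sketch, assuming the command prints nothing but the byte count (on Windows Server, PowerShell’s `(Get-Item <path>).Length` is one option; the exact command is an assumption) and that the node’s output arrives in its `stdout` field; `parseByteCount` is a hypothetical helper.

```javascript
// Hypothetical helper: turns the text printed by a size command
// (e.g. "2179631187\r\n" on Windows) into a byte count.
function parseByteCount(stdout) {
  const text = String(stdout).trim();
  if (!/^\d+$/.test(text)) {
    throw new Error(`Expected a plain byte count, got: ${JSON.stringify(text)}`);
  }
  const bytes = Number(text);
  // Numbers are exact only up to 2^53 - 1 (about 8 PiB), far beyond any
  // single file in this thread.
  if (!Number.isSafeInteger(bytes)) {
    throw new Error("Size too large to represent exactly");
  }
  return bytes;
}
```

In a Code node running once per item, this could be `parseByteCount($json.stdout)`.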