Hey there,

I’m running a self-hosted installation of n8n version 1.67.1 on Ubuntu 22.04, hosted on DigitalOcean.

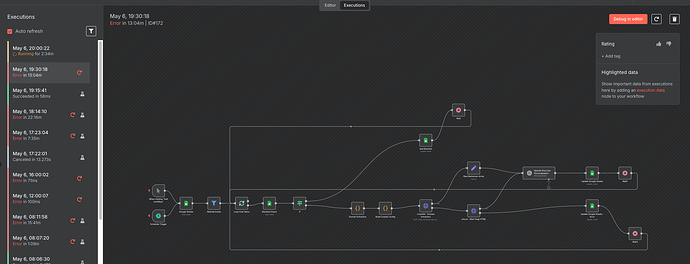

My workflow keeps failing, and I suspect the errors are related to the Crawl4AI scraper I’m using, which generates 4–8 MB of data at a time.
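
One rough way to check how much data each scrape actually produces is to measure its size once serialized to JSON. This is only a sketch under stated assumptions: it presumes the Crawl4AI result is available as a JSON-serializable Python dict, and `payload_size_mb` and the sample data are hypothetical.

```python
import json


def payload_size_mb(payload: dict) -> float:
    """Return the size of `payload` in mebibytes when serialized as UTF-8 JSON."""
    # Hypothetical helper: serialize the way a JSON-based pipeline would, then count bytes.
    encoded = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    return len(encoded) / (1024 * 1024)


# Hypothetical sample: one scraped page with about 5 MB of markdown text.
sample = {"url": "https://example.com", "markdown": "x" * (5 * 1024 * 1024)}
print(f"{payload_size_mb(sample):.2f} MB")
```

Logging this figure per item would show whether failures line up with the largest payloads.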

Issue details:

- Executions fail with errors, as shown in the execution history

- One particular node in my workflow is processing large volumes of data

- When I click “Debug in editor” or open the execution details, I can’t tell which node the error is occurring in

- The error happens consistently, but after a different run time each time (13:04m, 22:16m, 7:53s, etc.)

Questions:

- What are the best practices for troubleshooting n8n workflows that fail when handling large data volumes?

- Are there any specific logs I should check outside of the n8n interface?

- How can I identify memory or performance bottlenecks in my workflow?

- Are there recommended configurations or settings to optimize n8n for handling larger datasets?

- What information should I provide to help diagnose this issue?
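
For the memory question, one way to see whether the n8n process's memory climbs before a failure is to poll its resident set size from `/proc/<pid>/status` while the workflow runs. This is a minimal, Linux-only sketch: `parse_vmrss_kb` and `watch_rss` are hypothetical helper names, and it assumes the PID of the n8n process is already known (for example from `pgrep -f n8n` on the host).

```python
import re
import time
from pathlib import Path
from typing import Optional


def parse_vmrss_kb(status_text: str) -> Optional[int]:
    """Extract the resident set size (VmRSS, in kB) from /proc/<pid>/status text."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def watch_rss(pid: int, interval_s: float = 5.0, samples: int = 12) -> None:
    """Print the process's RSS every `interval_s` seconds, `samples` times (Linux only)."""
    status_path = Path(f"/proc/{pid}/status")
    for _ in range(samples):
        rss_kb = parse_vmrss_kb(status_path.read_text())
        timestamp = time.strftime("%H:%M:%S")
        if rss_kb is None:
            print(f"{timestamp} VmRSS not found")
        else:
            print(f"{timestamp} RSS={rss_kb / 1024:.1f} MB")
        time.sleep(interval_s)
```

For example, `watch_rss(12345, interval_s=10, samples=180)` would sample for about 30 minutes, long enough to cover the longer failing runs; `12345` is a placeholder PID.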

I’ve attached a screenshot of my workflow and execution history for reference.

Any guidance would be greatly appreciated!