Please bear with me as this is my first time posting in the community.

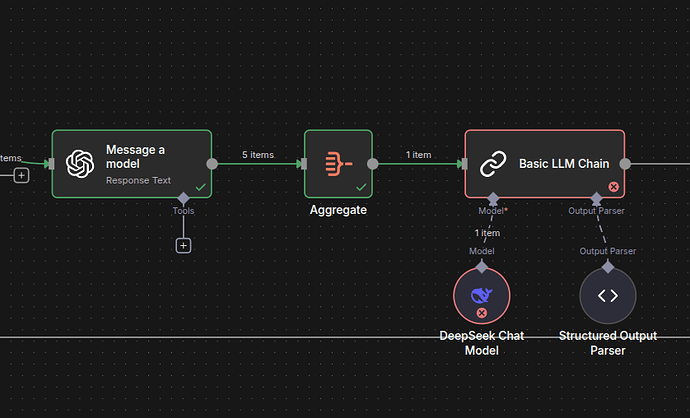

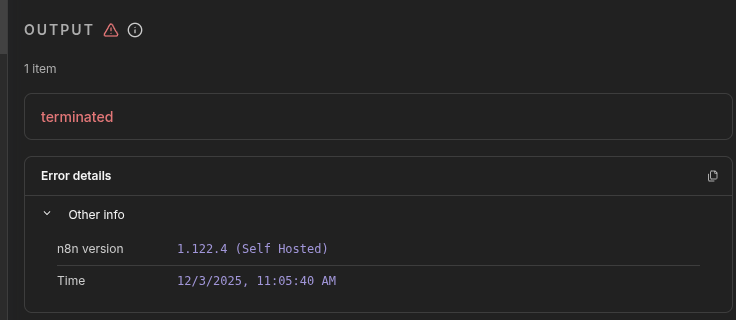

Anyway, I keep running into the error “Problem in node ‘Basic LLM Chain’ terminated” when my aggregated text goes into my DeepSeek LLM chain. It happens on roughly two out of every three runs, and the logs show no further details.

I only started running into this error today and have made no changes; this workflow was working fine yesterday. I am using deepseek-reasoner.

Any solution?

Hello and welcome to the community :),

First, check that you have enough credit in your DeepSeek account. The “terminated” message suggests that’s not the problem, but it’s worth ruling out.

Then please try:

- Use another input: instead of the Aggregate node, try a chat input, for example. Does it still break this way?

- If it doesn’t, could you provide an example of the output of the Aggregate node?
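If swapping the input doesn’t narrow it down, another way to isolate the problem is to send the same text to DeepSeek directly, outside n8n, so the raw HTTP status and response body are visible instead of just “terminated”. The following is only a rough sketch under some assumptions: it uses DeepSeek’s OpenAI-compatible chat completions endpoint, the helper names `build_payload` and `call_deepseek` are made up for illustration, and it sends a single user message without tools, so it won’t reproduce anything specific to tool use.

```python
import json
import urllib.error
import urllib.request

# Assumption: DeepSeek's OpenAI-compatible chat completions endpoint.
API_URL = "https://api.deepseek.com/chat/completions"


def build_payload(text: str, model: str = "deepseek-reasoner") -> dict:
    """Build a minimal single-message request body (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": text}],
        "stream": False,
    }


def call_deepseek(text: str, api_key: str, timeout: float = 120.0) -> tuple[int, str]:
    """Send the text once and return (HTTP status, raw response body)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(text)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses end up here; the body usually carries the real error.
        return err.code, err.read().decode("utf-8", errors="replace")
```

To try it, paste the Aggregate node’s output into a string, call `call_deepseek(text, api_key)` with your own key, and print the returned status and body. If the call succeeds here but still fails inside n8n, that points toward how the node builds the request rather than toward the input text itself.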

Thanks. It suddenly started working again after a few days of this issue, and I believe it was a problem on DeepSeek’s side that they have fixed (at least for now). There were a few GitHub issues that I think captured the error more clearly and explain the underlying cause, for example: DeepSeek Reasoner tool-use fails in n8n with 400 Missing reasoning_content (regression after DeepSeek V3.2) · Issue #22579 · n8n-io/n8n · GitHub

Hi

I don’t have a solution, but I’m getting the same error. I’ve been using DeepSeek as my model in n8n for six months, and it had never failed me before. Two days ago it started giving me this error, and I suspect it’s related to the new DeepSeek V3.2 release. I still need to work out why and test that theory.