We can now build our own MCP servers in n8n, but why would you want to? I spent the last few weeks building some of my own and wanted to share my thoughts on MCP use-cases and why they might be the next big thing for AI agents.

Hey there! I’m Jim, and if you’ve enjoyed this article, please consider giving me a “like” and a follow on LinkedIn or X/Twitter. For more on similar topics, check out my other AI posts in the forum.

With every new tech, I always try to distill how it benefits my clients and their businesses, and whether or not they should consider adopting it. This article is written with that perspective in mind, so forgive me if these use-cases aren’t for you personally.

I set out to implement a few MCP servers in n8n and ended up with 9 examples in total: a mix of basic tools as showcased on the official MCP website and a few vendor examples seen in the wild. I’ve published them for free on my creator page so you can try them out using the links below.

- FileSystem MCP Build your own FileSystem MCP server | n8n workflow template

- PostgreSQL MCP Build your own PostgreSQL MCP server | n8n workflow template

- SQLite MCP Build your own SQLite MCP server | n8n workflow template

- Qdrant MCP Build your own Qdrant Vector Store MCP server | n8n workflow template

- Github MCP Build your own Github MCP server | n8n workflow template

- Youtube MCP Build your own Youtube MCP server | n8n workflow template

- Google Drive MCP Build your own Google Drive MCP server | n8n workflow template

- Custom API MCP Build your own CUSTOM API MCP server | n8n workflow template

- Dynamic N8N Workflows MCP https://n8n.io/workflows/3770

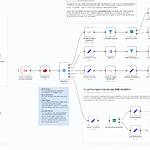

Some screenshots:

Learnings

After building these MCP server examples, here’s what I currently understand and have learned about MCP servers.

Bring Your Own Agent (BYOA)

MCP servers decouple tools from agents, which is interesting from both the provider and the user perspective. Service providers no longer need to build an agent for you, and given that LLM usage is typically the biggest expense, this makes sense. Users also finally get the option to switch to more powerful LLMs, which companies typically restrict due to cost.

Designing APIs for Agents

MCP represents a new trend I kinda suspected would happen eventually: we’re now designing interfaces for agents rather than humans! What’s the difference? For one, with agents you can afford to be a lot more verbose; you can send docs along with your API, expose hundreds of tools at once and have any number of parameters.
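
To illustrate the point, here is what a verbose, agent-facing tool definition might look like. MCP servers describe each tool with a `name`, a free-text `description` and a JSON Schema `inputSchema`, and they return these in the result of a `tools/list` request. The `search_orders` tool and all of its fields are hypothetical, chosen only to show how much documentation can ride along with the interface.

```python
import json

# Hypothetical tool definition in the shape MCP servers return from `tools/list`:
# a name, a free-text description and a JSON Schema describing the inputs.
# Because the reader is an agent, the description can carry full usage docs.
SEARCH_ORDERS_TOOL = {
    "name": "search_orders",
    "description": (
        "Search customer orders.\n"
        "Use this when the user asks about the status, contents or total of an order.\n"
        "- `status` filters by fulfilment state; omit it to search all orders.\n"
        "- `placed_after` must be an ISO 8601 date (YYYY-MM-DD).\n"
        "Returns at most `limit` orders, newest first."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "customer_email": {"type": "string", "description": "Exact email of the customer."},
            "status": {"type": "string", "enum": ["pending", "shipped", "delivered", "cancelled"]},
            "placed_after": {"type": "string", "format": "date"},
            "limit": {"type": "integer", "minimum": 1, "maximum": 50, "default": 10},
        },
        "required": ["customer_email"],
    },
}


def tools_list_result(tools):
    """Build the `result` payload of a JSON-RPC response to `tools/list`."""
    return {"tools": list(tools)}


if __name__ == "__main__":
    print(json.dumps(tools_list_result([SEARCH_ORDERS_TOOL]), indent=2))
```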

MCPs for Workflows, not just Tools

Designing agent tools for outcomes rather than utility has long been a recommended practice of mine, and it applies well to building MCP servers. In short, we want agents to make as few calls as possible to complete a task. Tool design and architecture will be even more important for successful and maintainable servers.
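
Here is a minimal sketch of the idea. It uses hypothetical in-memory stand-ins for a CRM, a billing system and a mailer. A single goal-oriented `send_invoice_tool` call performs the customer lookup, invoice creation and email. Exposed as three utility tools, the same work would cost the agent three separate calls. In n8n, this role would typically be played by a subworkflow attached as a custom workflow tool; the Python here only shows the shape.

```python
from dataclasses import dataclass, field

# Hypothetical customer records standing in for a real CRM.
CUSTOMERS = {"ada@example.com": {"id": "cus_1", "name": "Ada"}}


@dataclass
class Backend:
    """Hypothetical in-memory billing system and mail outbox."""
    invoices: list = field(default_factory=list)
    outbox: list = field(default_factory=list)

    def find_customer(self, email):
        return CUSTOMERS[email]

    def create_invoice(self, customer_id, amount):
        invoice = {"id": f"inv_{len(self.invoices) + 1}", "customer_id": customer_id, "amount": amount}
        self.invoices.append(invoice)
        return invoice

    def send_email(self, to, subject):
        self.outbox.append({"to": to, "subject": subject})


def send_invoice_tool(backend, customer_email, amount):
    """Goal-oriented tool: one agent call covers lookup, creation and delivery."""
    customer = backend.find_customer(customer_email)
    invoice = backend.create_invoice(customer["id"], amount)
    backend.send_email(customer_email, f"Invoice {invoice['id']} for {amount:.2f}")
    # Return a short, agent-readable summary rather than raw API payloads.
    return f"Sent invoice {invoice['id']} ({amount:.2f}) to {customer['name']}."


if __name__ == "__main__":
    print(send_invoice_tool(Backend(), "ada@example.com", 120.0))
```

Returning a one-line summary instead of the raw responses of each step also keeps the agent’s context small.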

Why n8n for MCP?

What makes n8n good for MCP servers?

Using n8n is probably one of the fastest ways to create customised MCP servers right now. The MCP Server Trigger is all you really need for a deployed, production-ready SSE endpoint that works with any MCP client supporting SSE, and you can attach around 267 tools out of the box. I’d say that is pretty hard to beat!

The learning curve is thankfully minimal: if you’re familiar with the AI Agent node, you’ll feel right at home attaching and using tools. If you want to get the most out of MCP servers, however, you’ll need to start learning about subworkflows and the custom workflow tool. Using custom workflows optimises your agent’s workflow, which saves time and provides a better user experience.

But there’s more: n8n can actually be a good MCP client!

Whilst I was testing with Claude Desktop, I inevitably ran into Anthropic’s relatively low usage limits. It was wild to me that once you reached this limit, Claude Desktop basically became a dead app! Great thing, then, that n8n is itself an MCP client, and the more I used it, the more I realised how powerful this feature is. What you get is an MCP client that can use basically any LLM (even locally hosted ones, such as via Ollama), can add more MCP servers or tools without restarting and, if you choose, can be integrated into an n8n workflow.

What would perhaps be kinda cool is a Claude Desktop-like chat interface component that displays the available tools to the user.
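
n8n handles this plumbing for you. Still, it helps to see why an MCP client can sit in front of any model. MCP itself is plain JSON-RPC 2.0, so the client only needs to do two things. It translates whatever tool call the LLM chose into a `tools/call` request, and it turns the `content` of the result back into text. The message shapes below follow the MCP specification. The helper names and the `search_orders` tool are hypothetical.

```python
import itertools
import json

# Monotonic JSON-RPC request ids for this client session.
_request_ids = itertools.count(1)


def tools_call_request(tool_name, arguments):
    """Wrap the tool call an LLM chose as an MCP `tools/call` JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def text_from_response(response):
    """Flatten a `tools/call` response into plain text that any LLM can read."""
    if "error" in response:  # JSON-RPC protocol-level error
        return "Protocol error: " + response["error"].get("message", "unknown")
    result = response["result"]
    texts = [part["text"] for part in result.get("content", []) if part.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        return "Tool error: " + " ".join(texts)
    return "\n".join(texts)


if __name__ == "__main__":
    request = tools_call_request("search_orders", {"customer_email": "ada@example.com"})
    print(json.dumps(request))
```

Nothing in these messages depends on which model produced the tool call. That independence is what lets the same MCP servers be reused with a hosted or a local LLM.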

Why not just use official vendor MCP servers?

Vendor MCP servers are great, but I’ve found they come with many limitations. Let’s ignore for a moment the fact that most of them use the STDIO transport, which may turn off a lot of non-devs. Feature-wise, I’ve found these MCP implementations to be either incredibly light (Qdrant) or, at the other extreme, too heavy (GitHub). Their credentials also often need to be exposed to the client, which is clunky. Building your own is probably the only way to strike the right balance for what you or your organisation needs.

Use Cases

Distributing Your Service via MCP and Letting Users Choose Their Own Agents

As mentioned, decoupling the agent from the tools has a key benefit: it reduces your own LLM costs by delegating them to the customer. The trade-off is that if the customer’s LLM is poor, it may hurt the perceived quality and performance of your product. I can see another benefit for customers who are conscious about where their data is going. Letting them own and control the agent part could alleviate those concerns and improve adoption.

Distributing Specialised MCPs for Teams

Likewise, building specialised, shared MCP servers for teams would be a great use-case. This gives teams more flexibility in which MCP clients they use; e.g. developers may prefer an IDE or code editor, whilst non-technical staff may prefer Claude Desktop. Some examples I can think of are knowledge-based MCPs and MCPs for internal HR processes such as requesting holiday.

Best Practices

It may be too soon to establish best practices for building MCP servers, but I believe a lot of what I learned building regular agent tools applies quite easily. I suspect not everyone will agree, so I encourage you all to challenge me on this! Leave a comment below with what you would change or think I’ve missed.

Be Goal-oriented, not Utility-oriented

I still firmly believe this holds true for architecting MCPs: tools designed around a goal such as “send invoice” do much better than simply attaching a service like “Gmail” and hoping the agent knows what to do with it. This way, the agent is more likely to use the correct tool at the correct time and in fewer iterations.

Prefer Parameterised Tool Inputs

In my PostgreSQL example, for instance, I wasn’t really comfortable allowing the agent to provide raw SQL statements, even though I was sure it could do a great job. My concern comes down to a lack of trust: what if the agent suddenly decides to perform operations beyond its expected scope? For example, could I coax the agent into deleting an entire table? Parameterising your tool inputs would (I hope!) be a defence against this.
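
Here is a minimal sketch of a parameterised query tool. It uses Python’s built-in `sqlite3` in place of PostgreSQL so it runs anywhere, and the `orders` table, its columns and the `find_orders` tool are hypothetical. The agent supplies only filter values. Column names are checked against a whitelist, and values are bound as query parameters. As a result, a value like `x'; DROP TABLE orders; --` is compared as a plain string rather than executed.

```python
import sqlite3

# Columns the agent may filter on; anything else is rejected outright.
ALLOWED_FILTERS = {"status", "customer_email"}


def find_orders(conn, filters, limit=10):
    """Read-only tool: the agent supplies filter values, never SQL."""
    unknown = set(filters) - ALLOWED_FILTERS
    if unknown:
        raise ValueError(f"Unsupported filter(s): {sorted(unknown)}")
    if not 1 <= limit <= 50:
        raise ValueError("limit must be between 1 and 50")
    columns = sorted(filters)
    # Column names come only from the whitelist; values are bound as parameters.
    where = " AND ".join(f"{col} = ?" for col in columns) or "1 = 1"
    sql = f"SELECT id, customer_email, status FROM orders WHERE {where} ORDER BY id LIMIT ?"
    params = [filters[col] for col in columns] + [limit]
    return conn.execute(sql, params).fetchall()


def make_demo_db():
    """In-memory demo table standing in for a real database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_email TEXT, status TEXT)")
    conn.executemany(
        "INSERT INTO orders (customer_email, status) VALUES (?, ?)",
        [("ada@example.com", "shipped"), ("bob@example.com", "pending")],
    )
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    print(find_orders(conn, {"status": "shipped"}))  # [(1, 'ada@example.com', 'shipped')]
    # The injection attempt is just a string value that matches no rows.
    print(find_orders(conn, {"status": "x'; DROP TABLE orders; --"}))  # []
```

PostgreSQL drivers use their own placeholder syntax (e.g. `$1` or `%s`), but the principle is the same.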

Always use Auth with your MCP server

As we grant our agents more access and permissions to our most sensitive data, it becomes increasingly important to ensure these privileges are not abused. If you are releasing any kind of MCP server to the public, always be vigilant and make sure you know who’s connecting to your system.
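
If your n8n version’s MCP Server Trigger offers built-in authentication, that is the place to configure it. The sketch below only illustrates what a bearer-token check boils down to. It assumes one hypothetical secret token per client, so every request can be tied to a known caller and each caller can be revoked on its own.

```python
import hmac

# Hypothetical per-client secrets; in practice, load these from a secret store
# so each connecting team or customer can be identified and revoked separately.
CLIENT_TOKENS = {
    "team-hr": "example-hr-token",
    "team-dev": "example-dev-token",
}


def authenticate(headers):
    """Return the client name for a valid `Authorization: Bearer <token>` header, else None."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        return None
    for client, expected in CLIENT_TOKENS.items():
        # compare_digest avoids short-circuiting on the first mismatched character,
        # which makes timing attacks on the token harder.
        if hmac.compare_digest(token.encode(), expected.encode()):
            return client
    return None


if __name__ == "__main__":
    print(authenticate({"Authorization": "Bearer example-hr-token"}))
```

This sketch looks up the header name case-sensitively for brevity. Real HTTP header names are case-insensitive, so a production check should normalise them first.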

Consider Versioning Your MCP Server

Like your APIs, your MCP servers will likely need to change and adapt as your organisation and service grow. You may need to add new tools and, at the same time, remove old ones, which may disrupt or break workflows for existing users. One approach would be to add simple versioning to your MCP server URLs, as with APIs, e.g. /mcp/v1/postgreSQL. This way, you can develop and release /mcp/v2 to newer users and provide a migration path for older ones.
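
Here is a minimal sketch of the URL-versioning idea. It assumes a hypothetical registry that maps each `/mcp/<version>/<server>` path to the tools that version exposes, and it flags old versions as deprecated while they are still served. In n8n itself, you might get much the same effect simply by giving each MCP Server Trigger a versioned path. The tool names here are hypothetical too.

```python
import re

# Hypothetical registry: (version, server) -> tools exposed by that version.
SERVERS = {
    ("v1", "postgreSQL"): ["run_query", "list_tables"],
    ("v2", "postgreSQL"): ["find_orders", "list_tables"],
}
# Versions still served for existing users but scheduled for removal.
DEPRECATED_VERSIONS = {"v1"}

MCP_PATH = re.compile(r"^/mcp/(v\d+)/([A-Za-z0-9_-]+)$")


def resolve(path):
    """Map a versioned MCP URL such as /mcp/v1/postgreSQL to its tool list."""
    match = MCP_PATH.match(path)
    if match is None:
        raise LookupError(f"Not a versioned MCP endpoint: {path}")
    version, server = match.groups()
    tools = SERVERS.get((version, server))
    if tools is None:
        raise LookupError(f"No {server} server at {version}")
    return {"version": version, "tools": tools, "deprecated": version in DEPRECATED_VERSIONS}


if __name__ == "__main__":
    print(resolve("/mcp/v1/postgreSQL"))
    print(resolve("/mcp/v2/postgreSQL"))
```

Keeping v1 reachable but flagged lets existing users keep working while new users start on v2.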

Conclusion

I’ve quite enjoyed this project exploring MCPs and how to implement them in n8n. I think the n8n team has done a great job of keeping it simple, and I hope they’ll keep iterating on this feature with all the feedback they’re getting from the community. Ultimately, I feel MCPs won’t be for everyone, but n8n makes them relatively inexpensive to try out with internal teams as well as customers, so why not? If you’ve made your own MCP server, I’d love to hear what you’re using it for and what your experience has been so far.

Still not signed up to n8n Cloud? Support me by using my n8n affiliate link.

Need more AI templates? Check out my Creator Hub for more free n8n x AI templates - you can import these directly into your instance!