Hi, I am currently building a solution that needs to scale. Because of the nature of the work and the amount of data involved, I had to split the data into batches and then split the processing across multiple workflows.

Some of these workflows fetch data from external APIs using pagination and batch processing. However, to avoid rate limits and failures, I have to add delays and other nodes, which increases the run time.

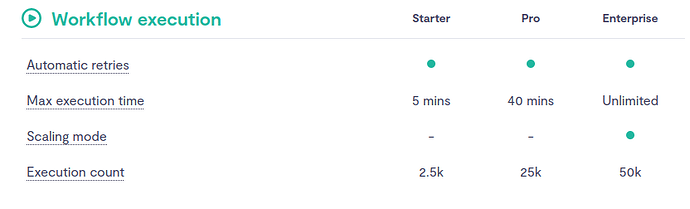
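
To make the pattern concrete, here is a minimal sketch of what the paginated fetch with delays amounts to. It is not my actual workflow: `fetch_page`, the delay value, and the page cap are hypothetical placeholders, and I am assuming the API signals the end of the data by returning an empty page.

```python
import time
from typing import Callable, Iterator, List


def fetch_all_pages(
    fetch_page: Callable[[int], List[dict]],  # hypothetical: returns the items for one page
    delay_seconds: float = 1.0,               # assumed pause to stay under the rate limit
    max_pages: int = 1000,                    # safety cap so the loop always ends
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[List[dict]]:
    """Yield one page at a time, pausing after each page is handed over."""
    for page in range(1, max_pages + 1):
        items = fetch_page(page)
        if not items:
            # Assumption: an empty page means there is no more data.
            return
        yield items
        sleep(delay_seconds)
```

Processing each page as it is yielded, instead of collecting everything into one list, keeps only a single page in memory at a time, but the delays still add up over many pages.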

My main concern is that the workflows sometimes run for up to an hour, while at other times they fail immediately because of a heap memory issue or other errors.

I tried setting a timeout on the cloud, but it seems I am only allowed to set a maximum timeout of 3 minutes. So even if I schedule a workflow to run every three minutes, the total monthly executions can reach 14,400, which can be costly.
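
The monthly figure comes from simple arithmetic. A quick sketch, assuming a 30-day month and one execution per trigger:

```python
MINUTES_PER_DAY = 24 * 60   # 1440 minutes in a day
interval_minutes = 3        # one run every three minutes
days_per_month = 30         # assumption: 30-day month

executions_per_day = MINUTES_PER_DAY // interval_minutes      # 480
executions_per_month = executions_per_day * days_per_month    # 14400

print(executions_per_month)
```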

Is there a way to find out how long a workflow can run before it times out, and how much memory a single workflow can use before it times out or fails?

I cannot find a way to change environment variables (ENV_VARIABLES) on the cloud.