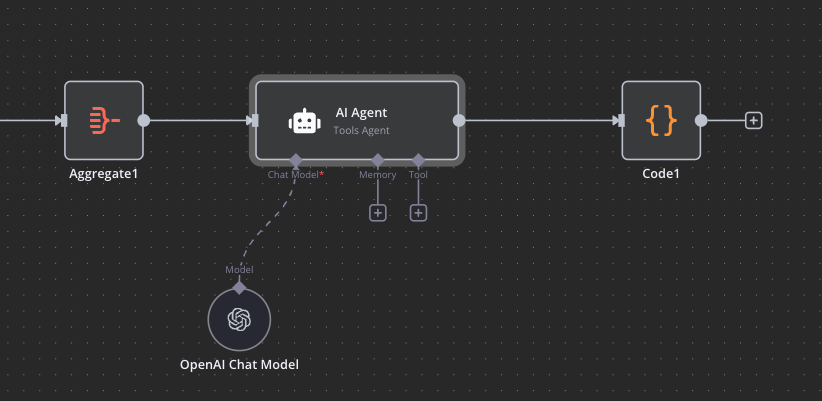

I am using the AI Agent in n8n to analyze and categorize error data, but the execution time is significantly slow. Sometimes, it takes over 2 minutes to process a relatively small dataset of 5-10 entries, which feels excessive.

I expected a much faster response time (ideally under 20 seconds), given that the dataset is small. Even after trying different batch sizes, the AI Agent still runs slowly.

Below is a fake dataset (not my real data) that mimics my use case and workflow:

Input:

[

{

"title": "Printed Brochures",

"data": [

{

"id": "error-abc123",

"job": "job-111111",

"comment": "Brochures arrived with torn pages."

},

{

"id": "error-def456",

"job": "job-222222",

"comment": "Pages were printed in the wrong order."

},

{

"id": "error-ghi789",

"job": "job-333333",

"comment": "The brochure cover was printed in the wrong color."

}

]

},

{

"title": "Flyers",

"data": [

{

"id": "error-jkl987",

"job": "job-444444",

"comment": "Flyers were misaligned during printing."

},

{

"id": "error-mno654",

"job": "job-555555",

"comment": "Flyers were cut incorrectly, leaving uneven edges."

},

{

"id": "error-pqr321",

"job": "job-666666",

"comment": "The wrong font was used in the printed text."

}

]

}

]

Output:

[

{

"title": "Printed Brochures",

"errors": [

{

"errorType": "Physical Damage",

"summary": "Brochures arrived with torn or damaged pages.",

"job": ["job-111111"],

"error": ["error-abc123"]

},

{

"errorType": "Print Order Issue",

"summary": "Pages were printed in an incorrect sequence.",

"job": ["job-222222"],

"error": ["error-def456"]

},

{

"errorType": "Color Mismatch",

"summary": "The cover was printed in the wrong color.",

"job": ["job-333333"],

"error": ["error-ghi789"]

}

]

},

{

"title": "Flyers",

"errors": [

{

"errorType": "Print Alignment Issue",

"summary": "Flyers were misaligned during the printing process.",

"job": ["job-444444"],

"error": ["error-jkl987"]

},

{

"errorType": "Cutting Issue",

"summary": "Flyers were cut incorrectly, resulting in uneven edges.",

"job": ["job-555555"],

"error": ["error-mno654"]

},

{

"errorType": "Font Error",

"summary": "Incorrect font was used in the printed text.",

"job": ["job-666666"],

"error": ["error-pqr321"]

}

]

}

]

Prompt Used in the AI Agent Node in n8n

You are an advanced data analysis and error categorization AI. Your task is to process input data containing error details grouped by “title”. Each group contains multiple error objects. Your goal is to identify unique error types from the comment field, generate concise summaries for each error type, and include relevant job and error values for those errors.

Input Fields:

- title: The product group title.

- data: An array of objects with the following fields:

- id: Unique identifier for the error.

- job: ID of the job.

- comment: Description of the issue.

Objective:

- Group errors by title.

- Analyze the comment field to identify unique error types.

- Generate meaningful summaries for each error type without repeating the comments verbatim.

- Include all relevant job and error values for each error type.

Output Format:

[

{

"title": "ProductGroupTitle",

"errors": [

{

"errorType": "Error Type",

"summary": "Detailed summary of the issue.",

"job": ["RelevantJobId1", "RelevantJobId2"],

"error": ["RelevantErrorId1", "RelevantErrorId2"]

}

]

}

]

Guidelines:

- Extract and group data by title.

- Identify error types from the comment field.

- Write concise, non-redundant summaries for each error type.

- Include only relevant job and error values for each error type.

- Return a clean, well-structured JSON array without additional text.

Input for Analysis: {{ JSON.stringify($input.all()[0].json.data) }}

Information on your n8n setup

- n8n version: 1.63.4

- Database (default: SQLite): cloud

- n8n EXECUTIONS_PROCESS setting (default: own, main): cloud

- Running n8n via (Docker, npm, n8n cloud, desktop app): cloud

- Operating system: cloud