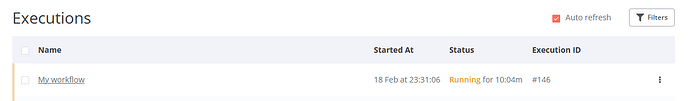

I have a self-hosted instance of n8n and the very simple workflow shown in the screenshot. For some reason the “test workflow” button works as expected, but when I enable the workflow, the resulting executions never finish running.

I’m quite new to n8n, so I’d appreciate any advice on where to start debugging.

Nothing suspicious on stdout/stderr.

N/A

- n8n version: 1.25.1
- Database (default: SQLite): PostgreSQL
- n8n EXECUTIONS_PROCESS setting (default: own, main): EXECUTIONS_MODE=queue
- Running n8n via (Docker, npm, n8n cloud, desktop app): docker
- Operating system: TrueNAS-SCALE-23.10.0.1 (Debian GNU/Linux 12)

2024-02-20 10:35:29.371213-08:00 2024-02-20T18:35:29.370Z | info | - "My workflow" (ID: 73KqfNlOEPzxMfow) "{ file: 'ActiveWorkflowRunner.js', function: 'add' }"

2024-02-20 10:35:29.371515-08:00 2024-02-20T18:35:29.371Z | debug | Initializing active workflow "My workflow" (ID: 73KqfNlOEPzxMfow) (startup) "{\n workflowName: 'My workflow',\n workflowId: '73KqfNlOEPzxMfow',\n file: 'ActiveWorkflowRunner.js',\n function: 'add'\n}"

2024-02-20 10:35:29.378034-08:00 2024-02-20T18:35:29.377Z | debug | Adding triggers and pollers for workflow "My workflow" (ID: 73KqfNlOEPzxMfow) "{ file: 'ActiveWorkflowRunner.js', function: 'addTriggersAndPollers' }"

2024-02-20 10:35:29.381017-08:00 2024-02-20T18:35:29.380Z | verbose | Workflow "My workflow" (ID: 73KqfNlOEPzxMfow) activated "{\n workflowId: '73KqfNlOEPzxMfow',\n workflowName: 'My workflow',\n file: 'ActiveWorkflowRunner.js',\n function: 'addTriggersAndPollers'\n}"

2024-02-20 10:35:36.394435-08:00 2024-02-20T18:35:36.394Z [Rudder] debug: in flush

2024-02-20 10:35:36.394507-08:00 2024-02-20T18:35:36.394Z [Rudder] debug: cancelling existing flushTimer...

2024-02-20 10:35:48.013493-08:00 2024-02-20T18:35:48.011Z | debug | Wait tracker querying database for waiting executions "{ file: 'WaitTracker.js', function: 'getWaitingExecutions' }"

2024-02-20 10:36:29.401422-08:00 2024-02-20T18:36:29.400Z | debug | Received trigger for workflow "My workflow" "{ file: 'ActiveWorkflowRunner.js', function: 'returnFunctions.emit' }"

2024-02-20 10:36:29.498151-08:00 Started with job ID: 1 (Execution ID: 150)

2024-02-20 10:36:48.013534-08:00 2024-02-20T18:36:48.012Z | debug | Wait tracker querying database for waiting executions "{ file: 'WaitTracker.js', function: 'getWaitingExecutions' }"

2024-02-20 10:37:09.349978-08:00 2024-02-20T18:37:09.349Z | verbose | Successfully deactivated workflow "73KqfNlOEPzxMfow" "{\n workflowId: '73KqfNlOEPzxMfow',\n file: 'ActiveWorkflowRunner.js',\n function: 'remove'\n}"

2024-02-20 10:37:09.379366-08:00 2024-02-20T18:37:09.378Z [Rudder] debug: no existing flush timer, creating new one

2024-02-20 10:37:19.380777-08:00 2024-02-20T18:37:19.379Z [Rudder] debug: in flush

2024-02-20 10:37:19.381051-08:00 2024-02-20T18:37:19.380Z [Rudder] debug: cancelling existing flushTimer...

2024-02-20 10:37:48.014112-08:00 2024-02-20T18:37:48.013Z | debug | Wait tracker querying database for waiting executions "{ file: 'WaitTracker.js', function: 'getWaitingExecutions' }"

2024-02-20 10:38:48.015209-08:00 2024-02-20T18:38:48.014Z | debug | Wait tracker querying database for waiting executions "{ file: 'WaitTracker.js', function: 'getWaitingExecutions' }"

I turned on debug logging, and the log above is the result: execution 150 was started but never finished.

Hi @yichi-yang

Do you have your workers up and running, and can they communicate with both Redis and your database?


You are right! I’m using the Helm chart maintained by the TrueNAS community, and it seems that it never starts the worker processes. I’ll file a bug report there.

