The idea is:

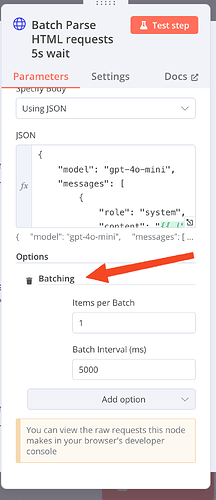

Add batching to the OpenAI node, similar to what the HTTP Request node offers.

The HTTP Request node supports batching, which is very helpful for avoiding API rate limits. Batch size and batch interval are already built into the HTTP Request node, so I assume they could easily be added to the OpenAI node as well.

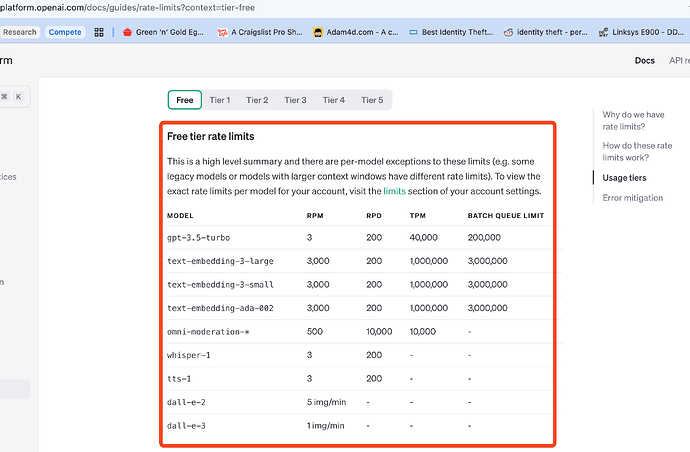

The OpenAI API enforces rate limits, so it makes sense for the OpenAI node to let me batch requests and set a wait interval between batches.
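To illustrate the requested behavior, here is a minimal TypeScript sketch of batching with an interval: send a fixed number of requests at once, then pause before sending the next group. The helper `runInBatches` and its parameters are hypothetical and are not n8n's actual API or implementation. It assumes each item maps to one API call performed by the `worker` callback.

```typescript
// Hypothetical helper illustrating batching; not n8n's actual implementation.
const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

async function runInBatches<T, R>(
  items: T[],
  batchSize: number, // how many requests are sent at once
  intervalMs: number, // pause between batches, in milliseconds
  worker: (item: T) => Promise<R>, // assumed: one API call per item
): Promise<R[]> {
  if (batchSize < 1) throw new Error("batchSize must be at least 1");
  const results: R[] = [];
  for (let start = 0; start < items.length; start += batchSize) {
    // Wait between batches, but not before the first one.
    if (start > 0 && intervalMs > 0) await sleep(intervalMs);
    const batch = items.slice(start, start + batchSize);
    // Requests within one batch run concurrently; results keep input order.
    const batchResults = await Promise.all(batch.map(worker));
    results.push(...batchResults);
  }
  return results;
}
```

With a batch size of 2 and an interval of 1000 ms, five items would be sent as batches of 2, 2, and 1, with a one-second pause before the second and third batches, which caps the request rate regardless of how many items arrive.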

My use case:

I am calling the OpenAI API to generate chat completions, and in doing so I have hit the API rate limit multiple times.

I think it would be beneficial to add this because:

The OpenAI API is widely adopted, and I expect more users will use it and run into this issue.

Are you willing to work on this?

Yes, but I need help setting up the n8n development environment.
