The idea is:

Allow the OpenAI node in chat mode to accept a dynamic list of user/assistant prompts.

My use case:

I have a dynamic list of messages that I need to send to the OpenAI Chat API (either GPT-3.5 or GPT-4). For example:

[
  { "role": "system", "content": "You are a helpful assistant." },
  { "role": "user", "content": "Who won the soccer world cup in 2018?" },
  {
    "role": "assistant",
    "content": "The 2018 FIFA World Cup was won by the French national football team."
  },
  { "role": "user", "content": "Where was it played?" }
]

The number of messages is not fixed; it may vary over time.

I think it would be beneficial to add this because:

It’s the way the Chat API works: each request has to include all the previous user/assistant messages.
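To illustrate, here is a rough Python sketch of how a variable-length history could be turned into a Chat API request body. `build_chat_payload` and `ALLOWED_ROLES` are hypothetical names made up for this example, not part of n8n or the OpenAI library, and the sketch only handles the three roles used in my example above. It builds the body only and does not send anything.

```python
from typing import Dict, List

# Roles used in the example conversation; this sketch rejects anything else.
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_chat_payload(history: List[Dict[str, str]], model: str = "gpt-3.5-turbo") -> dict:
    """Build a chat request body from a message list of any length (hypothetical helper)."""
    messages = []
    for item in history:
        role = item["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        # Copy only the two fields the chat format needs.
        messages.append({"role": role, "content": item["content"]})
    return {"model": model, "messages": messages}


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Who won the soccer world cup in 2018?"},
    {
        "role": "assistant",
        "content": "The 2018 FIFA World Cup was won by the French national football team.",
    },
    {"role": "user", "content": "Where was it played?"},
]

# The same call works whether the history has 2 messages or 200.
payload = build_chat_payload(history)
```

The point is that the whole list is passed through as-is, so nothing depends on a fixed number of input fields.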

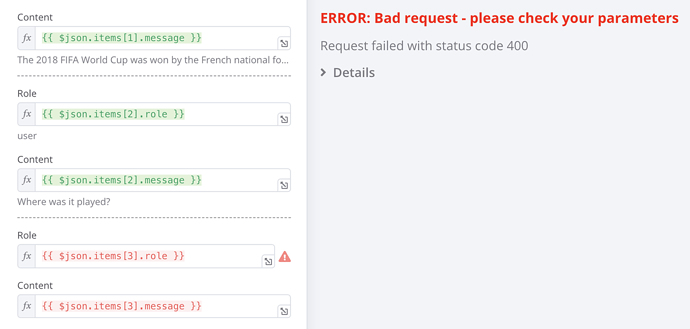

I tried doing so with the provided input fields, but if the length of the dynamic list doesn’t match the number of fields, the node throws an error, so this is not possible.

Any resources to support this?

I first asked about this as a question before submitting the feature request: "Use a dynamic list as prompts in OpenAI API"

Are you willing to work on this?

Not at this moment.