Can’t use OpenAI’s Deep Research because it costs $200/month? Don’t fret, I’ve got you covered with this bootleg version you can use for pennies!

Hey there! I’m Jim and if you’ve enjoyed this article then please consider giving me a “like” and a follow on LinkedIn or X/Twitter. For more similar topics, check out my other AI posts in the forum.


About a week ago, OpenAI announced Deep Research, an agentic solution designed to excel at complex research tasks and to perform them better and faster than humans. All the more exciting, it’s powered by OpenAI’s newest model series, o3, which boasts incredible benchmark scores, beating even GPT-4. The only problem is that, at the time of writing, you have to be on OpenAI’s $200/month Pro subscription to use it.

Bummer! Well, not if the open-source community has anything to say about it! Just days after the announcement, Hugging Face and others were already publishing their own free versions of Deep Research! I spent a day or two reading the various code repositories to learn the ins and outs, and realised it was very plausible to build it all in n8n!

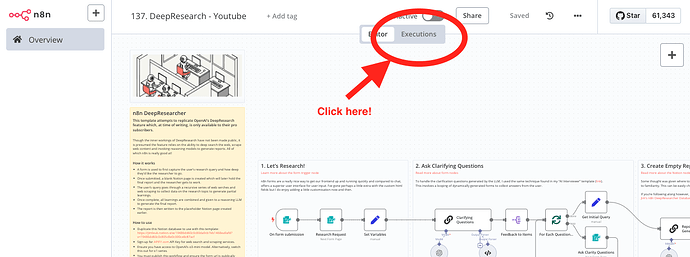

So I got to work building my own Deep Research in n8n based on David Zhang’s implementation (much easier than starting from scratch!), and after just a few hours I had a working draft! I spent another day improving it, adding features such as a form interface and the ability to upload the final reports to Notion.

Check out the sample research reports here - Jim’s DeepResearcher Reports


The Stack

OpenAI o3-mini for LLM (OpenAI.com)

I’ve been consistently impressed with reasoning models so far, and this kind of workflow is probably one of the best use-cases for them. o3-mini is working really well, and the price makes it an easy swap if you’re currently using o1-mini for similar templates. Note that, at the time of writing, you need to be on usage tier 3 or above; the simplest way to get there is to top up about $100 into your OpenAI account. Otherwise, feel free to swap it out for Gemini 2.0 Flash Thinking or DeepSeek R1.

Apify for SERP & Web Scraping (Apify.com)

Unlike the reference implementation, I decided to go with my current preferred web scraping service, Apify.com. It’s just a lot cheaper than Firecrawl or SerpAPI, and there are no arbitrary monthly usage limits that would hinder the usefulness of this workflow.

NEW: I’ve updated the template to use Apify’s new RAG Web Browser, which gives the workflow a nice boost: in initial testing, each web-scraping step finishes 3-5 minutes faster. Thanks @jancurn!
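If you want to try the RAG Web Browser outside the template, the snippet below shows one way to prepare a request to it using only Python's standard library. It is a minimal sketch and is not taken from the n8n workflow. It only builds the request and does not send it. Please verify the following assumptions against Apify's current documentation: the actor ID `apify~rag-web-browser`, the `run-sync-get-dataset-items` endpoint, and the `query`/`maxResults` input fields. The function name `build_rag_browser_request` is made up for illustration.

```python
import json
import urllib.request

# Assumptions: actor ID and endpoint path follow Apify's v2 API conventions;
# check both against the current Apify documentation before relying on them.
APIFY_BASE = "https://api.apify.com/v2"
ACTOR_ID = "apify~rag-web-browser"


def build_rag_browser_request(query: str, max_results: int, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a synchronous run of the RAG Web Browser actor.

    Hypothetical helper for illustration only.
    """
    # Assumed input fields; confirm them in the actor's input schema.
    payload = {"query": query, "maxResults": max_results}
    url = f"{APIFY_BASE}/acts/{ACTOR_ID}/run-sync-get-dataset-items"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


req = build_rag_browser_request("open-source deep research agents", 3, "YOUR_APIFY_TOKEN")
# To actually run it (needs network access and a real token):
# with urllib.request.urlopen(req, timeout=300) as resp:
#     items = json.load(resp)  # the run's dataset items as a JSON array
```

A synchronous run keeps things simple because the response arrives only after the scrape has finished. For long scrapes, Apify's asynchronous run endpoints with polling may be the more robust choice.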

Have your own favourite? Share in the comments below!

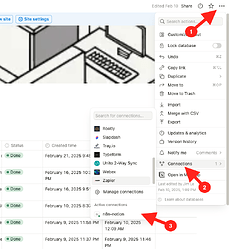

Notion for Reports (Notion.so)

I wasn’t convinced that a chat interface was the best format for reports, so I opted for a wiki-like tool such as Notion. This turned out great: it’s better suited to long-form content, and it makes past reports shareable, editable and searchable. The only pain was uploading the generated markdown report, and I settled on converting the markdown to HTML and then HTML to Notion blocks using Gemini…

Gemini 2.0 Flash for Markdown to Notion Conversion (Gemini)

Sometimes you just need to use the right model for the task, and in my experience Gemini 2.0 Flash simply works better for conversion tasks like this one. Note: if you’re not using Notion to store reports, feel free to swap this out.
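The template hands this conversion to Gemini. If your reports only use simple headings, bullet points and paragraphs, a deterministic converter could serve as an alternative or a fallback. The sketch below is not what the template does. `markdown_to_blocks`, `rich_text`, `block` and `batches` are hypothetical helpers that emit Notion's block JSON for a small subset of Markdown. Two limits are built in from my understanding of Notion's API: 2,000 characters per rich-text content string, and 100 blocks per append-children request. Please double-check both against the current Notion docs.

```python
import re

# Assumed Notion API limits; verify against the current documentation.
MAX_TEXT = 2000       # characters per rich-text "content" string
MAX_CHILDREN = 100    # blocks per append-children request

HEADING = re.compile(r"^(#{1,3})\s+(.*)$")
BULLET = re.compile(r"^[-*]\s+(.*)$")


def rich_text(text: str) -> list[dict]:
    """Split plain text into Notion rich-text objects of at most MAX_TEXT chars."""
    chunks = [text[i:i + MAX_TEXT] for i in range(0, len(text), MAX_TEXT)] or [""]
    return [{"type": "text", "text": {"content": c}} for c in chunks]


def block(kind: str, text: str) -> dict:
    """Build one Notion block of the given type (e.g. "paragraph", "heading_2")."""
    return {"object": "block", "type": kind, kind: {"rich_text": rich_text(text)}}


def markdown_to_blocks(markdown: str) -> list[dict]:
    """Convert #/##/### headings, -/* bullets and paragraphs to Notion blocks.

    Inline formatting such as **bold** or links is passed through as literal text.
    """
    blocks: list[dict] = []
    paragraph: list[str] = []

    def flush() -> None:
        # Consecutive non-empty lines are joined into a single paragraph block.
        if paragraph:
            blocks.append(block("paragraph", " ".join(paragraph)))
            paragraph.clear()

    for raw in markdown.splitlines():
        line = raw.strip()
        if not line:
            flush()
        elif m := HEADING.match(line):
            flush()
            blocks.append(block(f"heading_{len(m.group(1))}", m.group(2)))
        elif m := BULLET.match(line):
            flush()
            blocks.append(block("bulleted_list_item", m.group(1)))
        else:
            paragraph.append(line)
    flush()
    return blocks


def batches(items: list, size: int = MAX_CHILDREN) -> list[list]:
    """Split blocks into groups small enough for one append request each."""
    return [items[i:i + size] for i in range(0, len(items), size)]


report = "# Title\n\nIntro line one\nline two\n\n## Findings\n- point A\n- point B"
blocks = markdown_to_blocks(report)
```

Anything richer, such as tables, nested lists or inline links, is where a model like Gemini earns its keep. This sketch deliberately trades coverage for predictability.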

n8n for everything else! (n8n.io)

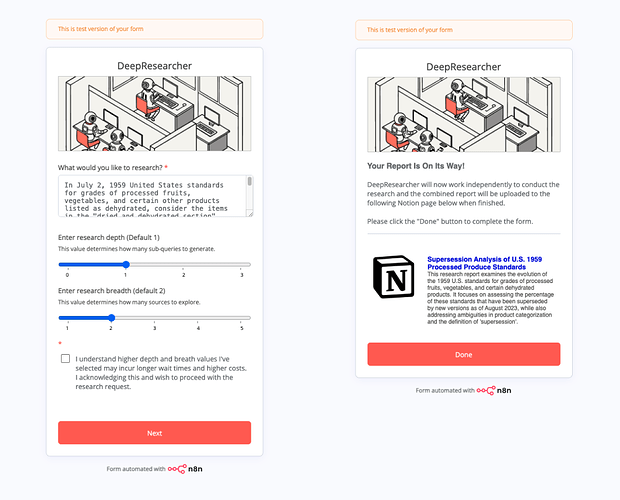

I’m loving the new updates to n8n’s forms, and now that you can do all sorts of things with custom HTML fields, they can make for really cool frontends! For the template, I built a simple series of custom forms to initiate the deep research (see screenshot).

As for the rest, it was actually quite easy to map the original code onto various n8n nodes, which makes the process easy to follow. Of course, I’m also taking full advantage of sub-workflows, as they help with managing performance.

How it works

Deep Search via Recursive Web Search+Scraping

This Deep Research approach works very much like a human researcher: starting from a single search query, it generates subqueries based on the new information it receives and searches again. This cycle can continue until enough data has been collected to generate the full report. The more cycles performed, the better the information collected and thus the better the report produced; however, it also takes much longer to complete. Humans, with our limited context spans, tire very quickly, but automating with AI lets us go much further. This is Deep Research!
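The search, learn and search-again cycle described above can be sketched as a recursive function. This is a minimal illustration, not the template's n8n implementation. `generate_queries` and `search_and_scrape` are hypothetical stubs that stand in for the LLM and the SERP/scraping step, and breadth stays fixed at every level. A possible variation is to narrow breadth as the search goes deeper.

```python
from dataclasses import dataclass, field


@dataclass
class Findings:
    learnings: list[str] = field(default_factory=list)
    sources: list[str] = field(default_factory=list)


def generate_queries(query: str, learnings: list[str], n: int) -> list[str]:
    # Hypothetical stub for the LLM call that proposes `n` follow-up queries.
    # A real version would use `learnings`; this stub ignores them.
    return [f"{query} > {i + 1}" for i in range(n)]


def search_and_scrape(query: str) -> tuple[list[str], list[str]]:
    # Hypothetical stub for SERP + scraping + summarising.
    # Returns (new learnings, source identifiers).
    return [f"learning from {query}"], [f"source for {query}"]


def deep_research(query: str, depth: int, breadth: int, found: Findings) -> Findings:
    """Search `breadth` subqueries, then recurse on each until `depth` runs out."""
    for sub_query in generate_queries(query, found.learnings, breadth):
        learnings, sources = search_and_scrape(sub_query)
        found.learnings.extend(learnings)
        found.sources.extend(sources)
        if depth > 1:
            # What was just learned feeds the next, deeper round of subqueries.
            deep_research(sub_query, depth - 1, breadth, found)
    return found


result = deep_research("open-source deep research", depth=2, breadth=2, found=Findings())
```

With depth=2 and breadth=2, this run makes 2 top-level searches plus 2 follow-ups for each of them, for 6 searches in total. It explores each branch fully before moving on to the next one.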

In code, this idea can be represented as a recursive loop, and the challenge was implementing it in n8n, which is built to be mostly linear. I actually figured out the technique a while ago when I was working on a template for AI content generation, and here’s a quick explanation of the same technique that I wrote earlier.
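n8n runs nodes in sequence rather than through a call stack. One general way to express recursive logic in a linear tool is to keep an explicit queue of pending work and loop back until the queue is empty. The sketch below illustrates that idea in Python. It is not necessarily the technique used in the template, and `expand` and `deep_research_queue` are hypothetical names.

```python
from collections import deque


def expand(query: str, n: int) -> list[str]:
    # Hypothetical stub for the LLM step that proposes `n` follow-up queries.
    return [f"{query} > {i + 1}" for i in range(n)]


def deep_research_queue(topic: str, depth: int, breadth: int) -> list[str]:
    """Process a query tree with an explicit queue instead of recursion.

    Each item is (query, remaining_depth). Handling an item may push new items
    onto the queue, much like a workflow looping back to an earlier step
    until there is no work left.
    """
    processed = []
    queue = deque((q, depth) for q in expand(topic, breadth))
    while queue:
        query, remaining = queue.popleft()
        processed.append(query)  # search + scrape would happen here
        if remaining > 1:
            queue.extend((q, remaining - 1) for q in expand(query, breadth))
    return processed


order = deep_research_queue("t", depth=2, breadth=2)
```

`popleft` takes items in the order they were added, so this version visits the tree level by level. A recursive version finishes one branch before starting the next, but both process the same set of queries. Replacing `popleft` with `pop` would turn the queue into a stack and make the traversal depth-first.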

Once implemented, it’s then just a case of making the “depth” and “breadth” variables adjustable, so users can balance the number of cycles against the cost and time they have available for their research.
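To see how depth and breadth trade off against cost and time, it helps to count the search queries a run makes. Suppose every query spawns `breadth` follow-ups until depth runs out. Then the total is b + b^2 + ... + b^d, so cost grows exponentially with depth. The hypothetical `total_searches` helper below computes that total. For comparison, it also supports a variant that halves breadth (rounding up) at each deeper level. That variant is an assumption and is not taken from the template. The function counts queries, not scraped pages; each query can return several pages.

```python
import math


def total_searches(depth: int, breadth: int, halve_breadth: bool = False) -> int:
    """Count search queries in a recursive deep research run.

    Each of the `breadth` queries at a level spawns its own sub-tree
    with one less level of depth remaining.
    """
    if depth < 1 or breadth < 1:
        return 0
    next_breadth = math.ceil(breadth / 2) if halve_breadth else breadth
    return breadth * (1 + total_searches(depth - 1, next_breadth, halve_breadth))


for depth in (1, 2, 3):
    print(depth, total_searches(depth, breadth=4), total_searches(depth, breadth=4, halve_breadth=True))
# 1 4 4
# 2 20 12
# 3 84 20
```

Raising breadth widens every level, while raising depth multiplies the whole tree. That is why depth is the setting to increase most cautiously.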

Report generation using Reasoning Models

If you’re still reading up on reasoning models, check out this X/Twitter thread from the OpenAI team about their o-series models. To paraphrase, reasoning models use a multi-step planning approach that excels at making sense of unstructured data and generating longer, more detailed outputs. Perfect for research!

In the template, I’ve stuck with minimal prompts in the AI nodes, and I believe they are far from optimised for every research use-case. If you do decide to use this workflow, definitely learn more about reasoning models and update the prompts accordingly!

How To Use

- Follow the setup instructions contained within the template: ensure you have access to o3-mini and Apify, and that your Notion database is set up.

- This template is designed to be initiated via its form, so either trigger it manually or activate the template to make the form publicly available.

- From the form, you can enter your research topic and configure 2 parameters - depth and breadth - which determine how “deep” you want the research to go.

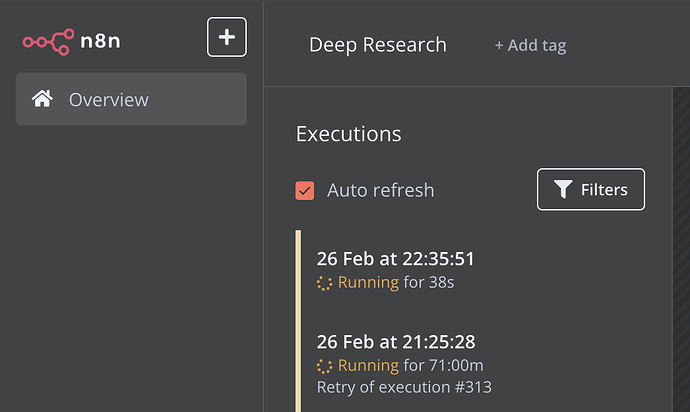

- This is not a quick workflow! With the default depth=1/breadth=2 settings, executions are expected to take at least 10 minutes, which will get you about 20 sources (i.e. scraped webpages). If you want more sources, increase the depth, the breadth or both, but be aware that this could take a very long time to complete.

- Sit back and relax… Seriously, go make a cup of tea and come back later! Deep Research is designed to work independently without human supervision.

- Finally, check the Notion page created for your research topic. Its status will change to done when the final report has been uploaded.

If you’re having trouble, let me know in the comments.

The Template

I had a lot of fun building this template and will be releasing it for free on my n8n creator page. Please have a go and leave a comment with your thoughts and feedback. Did I misunderstand anything about Deep Research? How would you improve this template?

Requires n8n @ 1.77.0+


Old version: n8n_deepresearch_community_share.json (Google Drive)

Conclusion

It’ll always be interesting to me how we keep discovering new ways of improving our use of LLMs with the most fundamental of methods. Credit to OpenAI for sparking this community effort, and to David Zhang (@dzhng) for open-sourcing his reference implementation. I hope I did it justice!


Still not signed up to n8n Cloud? Support me by using my n8n affiliate link. Disclaimer: this workflow does not work in n8n Cloud just yet!

Need more AI templates? Check out my Creator Hub for more free n8n x AI templates - you can import these directly into your instance!