Is there any support for deploying workflows as a package, e.g. with CI/CD? Together with their secrets, which could (preferably) be defined in the environment?

Sadly, I’m not sure I understand what you mean, but you can reference environment variables with `$env.VARIABLE_NAME` in both expressions and Function nodes.

So currently, the model as I understand it is:

- run the designer (the n8n UI) and build your workflow

- add secrets; they are stored in the database as ciphertext, encrypted with the config’s key

- test it until you are done with it

- export it to a JSON file

- commit the JSON file to git / source control

- upon deployment, manually go in and replace the existing workflows by overwriting them with the git-versioned workflow export (the JSON file)

- further, manually insert the secrets into the secrets database, so they are re-encrypted using the production key
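The manual export and import steps above can at least be scripted around the n8n CLI. A minimal sketch, assuming the `n8n` binary is available on both instances and that `export:workflow`/`import:workflow` accept the `--all`, `--separate`, `--output` and `--input` flags as in recent releases (check `n8n export:workflow --help` for your version). The helper names and the `workflows/` directory are hypothetical, and credentials are deliberately left out.

```python
import shlex
import subprocess
from pathlib import Path


def export_cmd(out_dir: Path) -> list[str]:
    """Build the command that exports every workflow, one JSON file per workflow."""
    # Assumption: flag names as in recent n8n CLI releases.
    return ["n8n", "export:workflow", "--all", "--separate", f"--output={out_dir}"]


def import_cmd(in_dir: Path) -> list[str]:
    """Build the command that imports every workflow JSON file in a directory."""
    return ["n8n", "import:workflow", "--separate", f"--input={in_dir}"]


def run(cmd: list[str], dry_run: bool = True) -> str:
    """Print the command (dry run) or execute it; return it as a shell-quoted string."""
    line = shlex.join(cmd)
    if dry_run:
        print(line)
    else:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return line
```

For example, `run(export_cmd(Path("workflows")), dry_run=False)` on the dev machine before committing, and `run(import_cmd(Path("workflows")), dry_run=False)` as the CD step on production.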

A more database-centric app, Hasura, does it like this: “Using Hasura GraphQL Engine with a CI/CD system: running migrations in your continuous delivery pipeline”. Migrations are automatically versioned on disk: with the Hasura console, they are written to the local file system as you edit your data model, which is a very powerful way of creating a diff. With n8n you have to export/import manually, and even then there is no “migration” or “upgrade” API for workflows that can be called during the CD step.
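n8n has no migration diff for workflows, but a CD step can compute a rough one from two versioned exports. A minimal sketch, assuming each export is a list of workflow objects with an `id` field (as produced when exporting all workflows into a single file); `diff_exports` is a hypothetical name, the set of ignored timestamp keys is an assumption, and “changed” simply means the remaining JSON differs.

```python
import json
from typing import Any

Workflow = dict[str, Any]

# Assumption: these keys change on every save and should not count as a change.
IGNORED_KEYS = {"createdAt", "updatedAt"}


def _canon(wf: Workflow) -> str:
    """Serialize a workflow deterministically, ignoring volatile keys."""
    return json.dumps({k: v for k, v in wf.items() if k not in IGNORED_KEYS}, sort_keys=True)


def diff_exports(old: list[Workflow], new: list[Workflow]) -> dict[str, list[str]]:
    """Return the ids of workflows added, removed or changed between two exports."""
    before = {str(wf["id"]): wf for wf in old}
    after = {str(wf["id"]): wf for wf in new}
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "changed": sorted(
            wid for wid in before.keys() & after.keys()
            if _canon(before[wid]) != _canon(after[wid])
        ),
    }
```

The output could drive which workflows a deployment job re-imports, similar in spirit to applying only pending migrations.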

Finally, in our deployment, we used k8s Sealed Secrets from Bitnami. It’s a very convenient model, as it allows offline encryption (and decryption, if you have access to the sealer’s private key) and lets developers one-way-encrypt secrets and commit them to git. At runtime, these are normally attached as env vars or mounted as files on disk (which is what the other thread I created was about: how to load these secrets).
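Loading a secret that a Kubernetes Secret (for example one produced by Sealed Secrets) exposes either as an environment variable or as a mounted file could look like this. A minimal sketch following the common `NAME_FILE` convention used by many container images; `load_secret` and the variable names are hypothetical.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def load_secret(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return secret `name` from the file named in NAME_FILE, else from NAME itself."""
    env = os.environ if env is None else env
    file_path = env.get(f"{name}_FILE")
    if file_path:
        # Mounted secret files often end with a trailing newline; strip it.
        return Path(file_path).read_text(encoding="utf-8").rstrip("\n")
    if name in env:
        return env[name]
    raise KeyError(f"secret {name!r} not found in {name} or {name}_FILE")
```

Preferring the file variant keeps the value out of the process environment, which is one reason mounted files are often chosen over env vars.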

So to summarise, I’m looking for a way to automate the deployment of changes to workflows.

+1 on CI/CD. Let me explain.

To use any kind of software in the enterprise, you need a repeatable way of doing tasks. Doing everything via the UI is problematic because there is no version control, and the release process can become a big mess.

If all of these integrations can be expressed in some DSL/JSON and deployed via some API/SDK call, then we can store them in git and use our CI/CD system (Jenkins, GitLab, GitHub, CircleCI, etc.) to build a release process around them.
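With recent n8n versions, the “deploy via an API call” step can target n8n’s public REST API. A minimal sketch, assuming the public API is enabled, an API key has been created, and the `/api/v1/workflows` endpoints accept `name`, `nodes`, `connections` and `settings` as in current releases (verify against your instance’s API documentation). `build_deploy_request` and `deploy` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Optional

# Assumption: read-only fields such as id, active or tags must be stripped before upload.
ALLOWED_KEYS = ("name", "nodes", "connections", "settings")


def build_deploy_request(base_url: str, api_key: str, workflow: dict[str, Any],
                         workflow_id: Optional[str] = None) -> urllib.request.Request:
    """Build a create (POST) or update (PUT) request for one exported workflow."""
    body = {k: workflow[k] for k in ALLOWED_KEYS if k in workflow}
    body.setdefault("settings", {})
    url = f"{base_url.rstrip('/')}/api/v1/workflows"
    method = "POST"
    if workflow_id is not None:
        url, method = f"{url}/{workflow_id}", "PUT"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={"X-N8N-API-KEY": api_key, "Content-Type": "application/json"},
    )


def deploy(req: urllib.request.Request) -> dict[str, Any]:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

A CI job would loop over the JSON files in the repository, call `deploy(build_deploy_request(...))` for each, and fail the pipeline on any HTTP error.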

Hope this makes sense.

Any recent updates on the deployment flow?

We are using a different approach. Instead of deploying workflows with CI/CD, we build the workflows in the UI and automatically back them up every X minutes with a GitHub backup workflow similar to this one: https://n8n.io/workflows/817

The goal is the same: version control for n8n workflow changes.

Hope this helps!

We do something as follows:

Each employee works locally with docker-compose; on boot, n8n imports all secrets and workflows from git (mounted as a volume).

Once done, the user exports the flow they have been working on and commits it to a branch.

Once a PR is opened, we run CI tests.

Once the PR is approved and merged to master, an on-commit hook is sent to n8n, and n8n starts deploying the changes to itself.

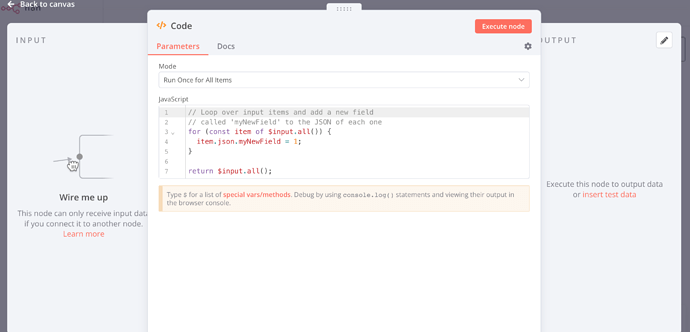
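The CI test step could start with a structural check on every exported workflow file. A minimal sketch, assuming the export format in which `connections` is keyed by source node name and each link names its target `node`; which checks matter is up to your team, and `validate_workflow` is a hypothetical name.

```python
from typing import Any


def validate_workflow(wf: dict[str, Any]) -> list[str]:
    """Return the problems found in one exported workflow (an empty list means OK)."""
    errors: list[str] = []
    for key in ("name", "nodes", "connections"):
        if key not in wf:
            errors.append(f"missing top-level key {key!r}")
    if errors:
        return errors
    names = [node.get("name") for node in wf["nodes"]]
    dupes = sorted({n for n in names if names.count(n) > 1}, key=str)
    errors += [f"duplicate node name {n!r}" for n in dupes]
    known = set(names)
    # Assumed shape: {source: {"main": [[{"node": target, ...}, ...], ...]}}
    for source, outputs in wf["connections"].items():
        if source not in known:
            errors.append(f"connection from unknown node {source!r}")
        for branches in outputs.values():
            for branch in branches:
                for link in branch or []:
                    if link.get("node") not in known:
                        errors.append(f"connection to unknown node {link.get('node')!r}")
    return errors
```

In the pipeline, load each `*.json` file with `json.load`, print any returned errors, and exit non-zero if there were some.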

How do your employees work with the Code node?

Do they write code right in the n8n UI, or do you have a better setup for that?

I’m not sure I understand your question.

The employees design their flows locally on their machines and export each flow by its ID to JSON (with the CLI); when the change is pushed to master, git sends a webhook to n8n, which deploys that flow.
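On the n8n side, the webhook handler first has to work out which flows a push touched. A minimal sketch, assuming GitHub’s push event payload (a `commits` list whose entries carry `added`, `modified` and `removed` file paths, oldest commit first) and a hypothetical `workflows/` folder holding one JSON file per flow; `changed_workflow_files` is a hypothetical name.

```python
from typing import Any


def changed_workflow_files(push_payload: dict[str, Any],
                           prefix: str = "workflows/") -> dict[str, list[str]]:
    """Collect the workflow JSON files to (re)deploy or remove after a push."""
    to_deploy: set[str] = set()
    to_remove: set[str] = set()

    def is_workflow(path: str) -> bool:
        return path.startswith(prefix) and path.endswith(".json")

    # Later commits override earlier ones, so a file added then removed ends up removed.
    for commit in push_payload.get("commits", []):
        for path in commit.get("added", []) + commit.get("modified", []):
            if is_workflow(path):
                to_deploy.add(path)
                to_remove.discard(path)
        for path in commit.get("removed", []):
            if is_workflow(path):
                to_remove.add(path)
                to_deploy.discard(path)
    return {"deploy": sorted(to_deploy), "remove": sorted(to_remove)}
```

The same logic could also live in a Code node behind the Webhook trigger; this sketch is plain Python only to keep it self-contained.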

I’m asking about the case where you need to write some code for the Code node.

Is there any setup for that, or do your teammates just write the code in the n8n UI?

Here are my thoughts on that:

I’ve now written a custom file-based node that works well with deployment scripts. It’s just a modified Code node that reads its code from a file. This way, you can use Kustomize, ConfigMaps, or anything else you like to place a file at a path configured in the workflow and have it executed. I’m happy to sell a non-exclusive license + source code at cost (8 hours at 100 EUR) if anybody is interested.
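A lighter-weight variant of the same file-based idea, which needs no custom node, is to keep Code node bodies as files in git and inline them into the exported workflow JSON during the build. This is a different technique from the node described above, sketched under the assumption that the Code node has type `n8n-nodes-base.code` and stores its JavaScript in `parameters.jsCode`; the `code/<node name>.js` layout and `inline_code` are hypothetical.

```python
from pathlib import Path
from typing import Any

CODE_NODE_TYPE = "n8n-nodes-base.code"  # assumption: current Code node type name


def inline_code(workflow: dict[str, Any], code_dir: Path) -> list[str]:
    """Replace each Code node's jsCode with the contents of code_dir/<node name>.js.

    Mutates `workflow` in place and returns the names of the nodes that were updated.
    """
    updated: list[str] = []
    for node in workflow.get("nodes", []):
        if node.get("type") != CODE_NODE_TYPE:
            continue
        source = code_dir / f"{node['name']}.js"
        if source.is_file():
            node.setdefault("parameters", {})["jsCode"] = source.read_text(encoding="utf-8")
            updated.append(node["name"])
    return updated
```

The code files can then be edited, linted and unit-tested with normal tooling, while the deployed workflow still carries the code inline.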

I’ve shared a simple solution for deployment: “Syncing local n8n workflows with remote host”.

It’s pretty basic, but it works fine for me.

We are currently working on new features to improve workflow lifecycle management (multiple environments, version control, CI/CD integration).

If some of you have some time to spare, it would be very valuable to chat, to get an in-depth understanding of your needs and test our initial ideas against them.

You can book a slot here or just drop me a message.

@romain-n8n thanks for soliciting feedback.

My objective is fairly basic at this point, so please let me know if it’s of interest to you and we can set up a call.

- n8n is in very active development.

- I want to get the latest/greatest version to get new features and get rid of bugs.

- However, I don’t want to break what’s working in my currently deployed version with newly introduced bugs or breaking changes (which happens despite our best efforts).

What I want is to have at least 2 instances of n8n, prod and staging/dev, configured with environment variables (the easiest option), so that I can “test” the latest version in staging and, once satisfied that it doesn’t break anything, upgrade the production version.

Moving workflows between instances is amazingly easy using just a SQLite copy or the export/import CLI, so while we can do better, it works for now.
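For the SQLite route, copying the file while n8n is running can catch it mid-write; Python’s `sqlite3` online backup API takes a consistent snapshot instead. A minimal sketch; `snapshot_db` is a hypothetical helper, and the usual database location (`~/.n8n/database.sqlite`) is an assumption about a standard install.

```python
import sqlite3


def snapshot_db(src_path: str, dst_path: str) -> None:
    """Copy a (possibly live) SQLite database to dst_path using the online backup API."""
    src = sqlite3.connect(src_path)
    dst = sqlite3.connect(dst_path)
    try:
        with dst:
            src.backup(dst)  # copies all pages as one consistent snapshot
    finally:
        dst.close()
        src.close()
```

Note that credentials in the copy remain encrypted with the source instance’s encryption key, so the staging instance needs the same key to use them.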

Thanks for the insights! This is very much on point since we are currently contemplating two approaches (not mutually exclusive):

- Have several environments within a single instance (and seamlessly promote changes in a Workflow from one to another)

- Make it easier for integrators to maintain autonomous instances for each of their dev environments, but give them tools to easily and confidently promote/sync workflows between them

The first one obviously has the advantage of simplicity and consistency for integrators, but does not let them easily test n8n version bumps to mitigate bugs or breaking changes in production.

Anyway, there are some common requirements for both those approaches:

- make the workflows as “stateless” as possible, by leveraging env variables

- having smart matching strategies to manage relationships between workflows & sub-workflows or to de-duplicate webhook endpoints

- potentially incorporating a version control system (built-in or leveraging the git ecosystem).
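As an illustration of the webhook de-duplication point, a pre-import check could list webhook endpoints claimed by more than one workflow. A minimal sketch, assuming Webhook nodes of type `n8n-nodes-base.webhook` with `path` and `httpMethod` parameters, where a missing `httpMethod` means the default GET; it ignores test vs. production URLs, and `duplicate_webhooks` is a hypothetical name.

```python
from collections import defaultdict
from typing import Any

WEBHOOK_TYPE = "n8n-nodes-base.webhook"


def duplicate_webhooks(workflows: list[dict[str, Any]]) -> dict[tuple[str, str], list[str]]:
    """Map (HTTP method, path) to the workflows registering it, keeping only clashes."""
    seen: dict[tuple[str, str], list[str]] = defaultdict(list)
    for wf in workflows:
        for node in wf.get("nodes", []):
            if node.get("type") != WEBHOOK_TYPE:
                continue
            params = node.get("parameters", {})
            # Assumption: an omitted httpMethod means the node default, GET.
            key = (params.get("httpMethod", "GET"), params.get("path", "").strip("/"))
            seen[key].append(wf.get("name", "<unnamed>"))
    return {key: names for key, names in seen.items() if len(names) > 1}
```

A promotion tool could refuse to import, or prompt for a rename, whenever this returns a non-empty result.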

@romain-n8n thanks.

Let me tell you what is missing for the simple scenario I outlined of 2 separate instances, which I feel is better for DR purposes, i.e. having 2 instances in 2 datacenters is better than putting all your eggs in one instance (mixing metaphors).

I can easily just copy over the SQLite DB, create a new Docker instance, change the env variables and bring up the new instance; it works perfectly.

BUT

Since each workflow can do all kinds of things (e.g. some may make credit card charges, delete database entries or perform important transactions), I don’t want them all to be active the moment I bring up the new Docker instance. That is what happens today.

I also do not want them all to be disabled on startup, because if you have 100 workflows in production and 10 of them are disabled, it is then no longer possible to see which were enabled and which were disabled.

Also, in the UI you cannot make that many changes in staging as soon as it comes up, because the process is manual and has to be done for each workflow.

We can use tags to categorize, but it still takes time, and triggered/scheduled workflows may fire before you manually disable them, e.g. a job that runs every minute will run before you can disable it.

Also, when I last did this, I had a Stripe integration, and as soon as the staging Docker instance came up, it created duplicate webhooks in Stripe for everything, pointing to the staging environment, which I didn’t want and then had to delete manually. Again, not a good thing from a transactional perspective.

Potential solution: allow the flexibility to mass enable or disable workflows on startup.

i.e. if I could tag the workflows that should not automatically come up on startup, that would be a quick solution (not the most elegant, but quick).

Then, if we had the ability to multi-select workflows (after a filter, or all workflows) and mass enable or disable them, that would provide even more flexibility and speed in quickly disabling or re-enabling 100 workflows.

e.g. I could filter by the tag “scheduled” (i.e. the ones that have a cron or schedule) and then mass disable them.

If all this could be programmatic then it would be perfect and lend to automation.
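Until something like this exists in n8n, one programmatic workaround is to rewrite the exported JSON before importing it into the new instance, so that workflows carrying a given tag arrive inactive. A minimal sketch, assuming each exported workflow has an `active` flag and a `tags` list whose entries are either tag objects with a `name` or plain strings; `deactivate_tagged` and the tag names are hypothetical, and whether your n8n version honours the `active` flag on import is worth checking first.

```python
from typing import Any


def tag_names(workflow: dict[str, Any]) -> set[str]:
    """Return the workflow's tag names, accepting tag objects or plain strings."""
    return {t["name"] if isinstance(t, dict) else str(t) for t in workflow.get("tags", [])}


def deactivate_tagged(workflows: list[dict[str, Any]], tags: set[str]) -> list[str]:
    """Set active=False on every active workflow carrying one of `tags`; return their names."""
    changed: list[str] = []
    for wf in workflows:
        if wf.get("active") and tag_names(wf) & tags:
            wf["active"] = False
            changed.append(wf.get("name", "<unnamed>"))
    return changed
```

The returned names double as a record of what was switched off, which addresses the concern about losing track of which workflows were enabled in production.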

Happy to add more detail if you need it

Interesting “limitation” indeed, and it makes total sense to have a different “workflow enablement” policy across environments (staging/test workflows are usually more transitory, whereas stability is key for prod workflows).

I wonder if a workflow-level setting such as “force enable on import” or “force disable on import”, set with an environment variable, could be a proper solution (in this case the behaviour could differ for the same workflow depending on the context).
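The proposed setting could be resolved roughly like this at import time. A minimal sketch of the idea only: `N8N_DEPLOY_ENV`, the per-environment `activationOnImport` map in the workflow settings and `active_after_import` are hypothetical names, not existing n8n options.

```python
import os
from typing import Any, Mapping, Optional

VALID_RULES = {"keep", "force_enable", "force_disable"}


def active_after_import(workflow: dict[str, Any],
                        env: Optional[Mapping[str, str]] = None) -> bool:
    """Decide whether an imported workflow should start active in this environment.

    Hypothetical policy: N8N_DEPLOY_ENV names the context (e.g. "staging"), and the
    workflow's settings.activationOnImport maps contexts to a rule; "keep" (the
    default) uses the exported `active` flag unchanged.
    """
    env = os.environ if env is None else env
    context = env.get("N8N_DEPLOY_ENV", "production")
    rules = workflow.get("settings", {}).get("activationOnImport", {})
    rule = rules.get(context, "keep")
    if rule not in VALID_RULES:
        raise ValueError(f"unknown activation rule {rule!r}")
    if rule == "force_enable":
        return True
    if rule == "force_disable":
        return False
    return bool(workflow.get("active", False))
```

Because the rule is looked up per context, the same exported workflow can start disabled in staging while keeping its exported state in production.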

@romain-n8n yes, that setting would work, BUT there have to be corresponding UI changes where you can bulk-select workflows and mark them.

This bulk selection in the UI is essential anyway to bulk activate/deactivate/tag workflows.

It would be similar to the bulk selection of executions for deletion, but it needs to be a bit more flexible.

i.e. the UI should allow filtering by tag (which it does today), then bulk-selecting the filtered records and applying operations such as active/inactive/tag/force_enable_on_import/force_disable_on_import.

Not sure if the Stripe issue can be solved by marking those workflows inactive, and whether that design pattern is followed for other nodes or is a one-off for Stripe.