Hi,

We are testing the HTTP Request node, and it launches all requests concurrently. This can easily exceed the IP rate limit of almost any API.

An option to set a concurrency limit would help avoid this kind of problem.
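For illustration only (this is not an n8n API), a concurrency limit means that at most N requests are in flight at any moment, and a new one starts only when a previous one finishes. A minimal plain JavaScript sketch, assuming each request is an async function; `mapWithConcurrency` is a hypothetical helper name:

```javascript
// Minimal sketch: run an async task over every item, but never more than
// `limit` tasks at the same time. `mapWithConcurrency` is a hypothetical
// helper, not part of n8n.
async function mapWithConcurrency(items, limit, task) {
  const results = new Array(items.length);
  let next = 0; // index of the next item that has not been started yet

  // Each worker keeps pulling the next unstarted item until none are left.
  // JavaScript is single-threaded, so `next++` cannot race between workers.
  async function worker() {
    while (next < items.length) {
      const index = next++;
      results[index] = await task(items[index], index);
    }
  }

  // Start at most `limit` workers and wait until all of them are done.
  const workerCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results; // results keep the original item order
}
```

For example, `mapWithConcurrency(urls, 2, (url) => fetch(url))` would keep at most two requests open at a time (assuming a runtime with a global `fetch`, such as Node.js 18+). A fixed pool of workers, rather than fixed batches, means one slow request does not hold up a whole group.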

Could you add this option to the HTTP Request node?

Thanks!

Replying to myself:

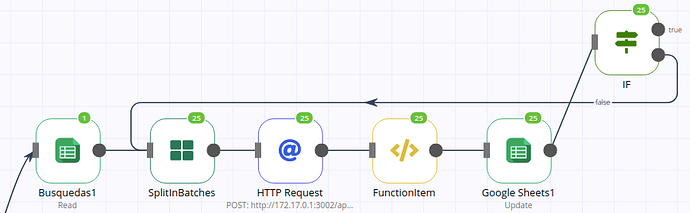

You can put a SplitInBatches node before any request and defining the required batch number, depending on the service concurrency limit per user account. Working task looks this way:
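The effect of that setup can be sketched in plain JavaScript, assuming (as the first post describes) that the HTTP Request node sends all items it receives at once. Each batch of `batchSize` items is sent together, and the next batch starts only after the previous one completes. `chunk`, `processInBatches` and `sendRequest` are hypothetical names for illustration, not n8n APIs:

```javascript
// Split the items into consecutive chunks of at most `batchSize` items.
function chunk(items, batchSize) {
  if (!(batchSize >= 1)) throw new RangeError("batchSize must be at least 1");
  const batches = [];
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}

// Send one batch at a time; `sendRequest` is a hypothetical async function
// standing in for the HTTP Request node.
async function processInBatches(items, batchSize, sendRequest) {
  const results = [];
  for (const batch of chunk(items, batchSize)) {
    // Requests inside one batch run concurrently...
    const batchResults = await Promise.all(batch.map((item) => sendRequest(item)));
    // ...but the next batch starts only after this one has completed.
    results.push(...batchResults);
  }
  return results;
}
```

So with a batch size of 5, no more than 5 requests are ever open at once, which is why the batch size should match the service's per-account limit.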

Instead of continuously pinging the endpoint in the loop, I suggest adding a wait time before each request.

You can use a Function node to achieve this. The code below adds a 2-second wait before each request.

Place the Function node right before the HTTP Request node. To use a longer delay, change the value of `waitTimeSeconds` to the number of seconds you want.

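```javascript
// Delay in seconds before the incoming items are passed to the next node
const waitTimeSeconds = 2;

// `items` is provided by the Function node; resolve with it after the delay
return new Promise((resolve) => {
  setTimeout(() => {
    resolve(items);
  }, waitTimeSeconds * 1000); // setTimeout expects milliseconds
});
```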

Here 2 × 1000 = 2000 ms, i.e. a 2-second wait, because `setTimeout` takes its delay in milliseconds.
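Combining the two ideas, a SplitInBatches loop with a batch size of 1 and the Function node above amounts roughly to the following plain JavaScript sketch: one request at a time, each preceded by a fixed pause. `sendWithDelay` and `sendRequest` are hypothetical names used only for illustration:

```javascript
// Promise-based pause, the same technique as the Function node above.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Handle items strictly one after another, pausing before every request.
// `sendRequest` is a hypothetical async function standing in for the HTTP call.
async function sendWithDelay(items, waitTimeSeconds, sendRequest) {
  const results = [];
  for (const item of items) {
    await sleep(waitTimeSeconds * 1000); // setTimeout expects milliseconds
    results.push(await sendRequest(item));
  }
  return results;
}
```

With `waitTimeSeconds = 2`, consecutive requests start about 2 seconds plus the duration of the previous request apart, so the rate never exceeds one request every 2 seconds.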


Thanks @mcnaveen.

Nice idea!


You’re welcome! Let’s grow together.

