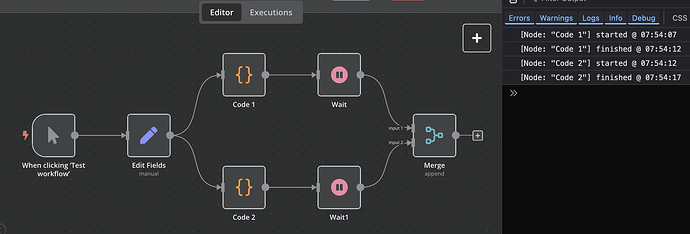

First, I understand that the fix is to switch to the “Legacy” (v0) execution order instead of the default “Recommended” (v1) option. Also, as I understand it, that setting changes the way the entire workflow behaves, for every fork in the flow.

I may be reading between the lines regarding the reason for the forked path in the original question, where one path is “Process Info 1”, and the other is “Process Info 2.” I guess I assumed there could be an Info 3, Info 4, etc. in the form input, but that the example had been simplified to focus on whether the paths actually run at the same time. So, yes, switching to the old/previous execution order does fix that simplified case.

As for how literally to interpret the term “parallel,” I think it depends on the use case. It can mean truly concurrent, or it can mean “during the same section of the overall flow, but single-threaded/one-at-a-time, without changing the execution order setting.” IMO, most people interpret “parallel” the first way: “everything runs at once.”

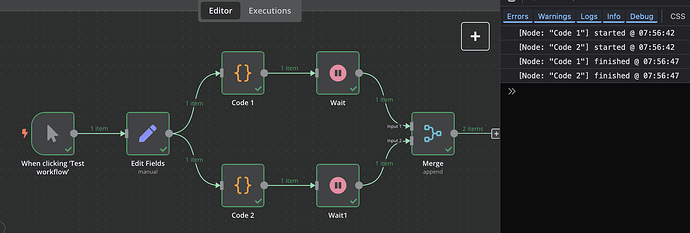

So, if you actually want an arbitrary number of sub-workflows to run at the same time, you need to do something different (and, unfortunately, more complicated). Your workflow demo/example (in v0 execution order mode) is fine as long as you know ahead of time exactly how many forks (logic branches) you need. You also have to be OK with the semi-hidden, non-default behavior change in the workflow settings.

Like I said before, the complexity is only warranted if you actually need things to run in parallel, AND you need to apply intentional logic based on how many of the sub-workflows have completed, or on when all of them have.

One example of where in-series wouldn’t work is if you needed at least 3 approvals from a (dynamically sized) list of “on-duty managers” to proceed. Sending the approval requests out one at a time would prolong the process, or likely block it completely. Without truly parallel operation in the workflow, you’d have to implement some kind of external service to handle the “first 3 of ?? approval responses” logic.
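To make the “first 3 of ?? approvals” idea concrete, here is a minimal sketch in plain Python `asyncio`. This is not n8n code, just an illustration of the logic that would have to live somewhere. The names `request_approval` and `first_k_approvals` are made up for this example, and the delays stand in for however long each manager takes to respond.

```python
import asyncio
from typing import Coroutine, List, Optional, Tuple


async def request_approval(manager: str, delay: float, approves: bool) -> Tuple[str, bool]:
    """Hypothetical stand-in for sending one approval request and waiting for the reply."""
    await asyncio.sleep(delay)  # simulated response time
    return manager, approves


async def first_k_approvals(requests: List[Coroutine], k: int) -> Optional[List[str]]:
    """Send every request at once; return as soon as k approvals have arrived.

    Returns None if everyone has answered and fewer than k approved.
    """
    tasks = [asyncio.create_task(r) for r in requests]
    approved: List[str] = []
    try:
        # as_completed yields results in the order they finish, not the order sent
        for finished in asyncio.as_completed(tasks):
            manager, ok = await finished
            if ok:
                approved.append(manager)
                if len(approved) >= k:
                    return approved
        return None
    finally:
        # Stop waiting on anyone who has not answered yet
        for t in tasks:
            t.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)


async def demo() -> Optional[List[str]]:
    on_duty = [  # dynamically sized list in real life; fixed here for the example
        request_approval("alice", 0.05, True),
        request_approval("bob", 0.01, False),
        request_approval("carol", 0.02, True),
        request_approval("dave", 0.03, True),
        request_approval("erin", 0.50, True),  # slow responder, never waited for
    ]
    return await first_k_approvals(on_duty, k=3)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The point is that the slow responder never holds anything up: once three approvals are in, the rest are abandoned. Sent one at a time, the same list would take the sum of all the response times.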

Another example is making multiple calls out to a service that takes a significant amount of time to complete. The use case that inspired this approach for me was the creation of multiple compute resources (virtual machines), but not always the same number of them. Waiting for each one to be provisioned and configured (sometimes 10+ minutes) was unacceptable when they were requested one by one, because the wait times stacked. Nothing else we tried would make all of the “create vm” requests at once, and still wait for all of them to finish before continuing.
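The “time stacked” problem is easy to show outside of n8n. The sketch below is plain Python `asyncio`, not a workflow, and `create_vm` is a hypothetical placeholder that just sleeps instead of calling a real provisioning API. It compares requesting the VMs one by one against firing all the requests at once and waiting for the whole group.

```python
import asyncio
import time
from typing import List


async def create_vm(name: str, provision_seconds: float) -> str:
    """Hypothetical placeholder for a slow 'create vm' call."""
    await asyncio.sleep(provision_seconds)  # simulated provisioning time
    return f"{name}: ready"


async def create_one_by_one(names: List[str], provision_seconds: float) -> List[str]:
    """In-series: each request starts only after the previous one finishes."""
    return [await create_vm(n, provision_seconds) for n in names]


async def create_all_at_once(names: List[str], provision_seconds: float) -> List[str]:
    """Fan out every request, then wait for all of them before continuing."""
    return await asyncio.gather(*(create_vm(n, provision_seconds) for n in names))


def timed(coro) -> tuple:
    """Run a coroutine and return (result, elapsed_seconds)."""
    start = time.monotonic()
    result = asyncio.run(coro)
    return result, time.monotonic() - start


if __name__ == "__main__":
    vms = ["vm-1", "vm-2", "vm-3", "vm-4"]  # the count varies per request in practice
    _, serial = timed(create_one_by_one(vms, 0.05))
    _, parallel = timed(create_all_at_once(vms, 0.05))
    print(f"one by one: {serial:.2f}s, all at once: {parallel:.2f}s")
```

In-series, the total is roughly the per-VM time multiplied by the number of VMs; fanned out, it is roughly the time of the slowest single VM. The “wait for all of them” step is the part that `asyncio.gather` plays here.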

It would be really nice if n8n had something like a “Join Group” node, and a corresponding “Wait for Group” option in the Wait node, but as far as I can tell, it does not have such a thing yet.

I also have some scenarios where changing to the v0 execution order would probably be a simpler approach. However, it does make me nervous that something marked “Legacy” next to something marked “Recommended” might disappear in an upcoming release.