Hey! I have a strange problem with my n8n setup. In general, it pulls data over HTTP, splits it into a list of items with a Function node, and then for each item it should:

- query the DB with the item

- create a new record in the DB if no record exists
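
To make the expected behaviour concrete, here is a rough TypeScript sketch of what I want to happen for each item. It is not my actual workflow: the `Item` shape, the `key` field, the function names, and the in-memory `Map` standing in for the Postgres table are all assumptions for illustration.

```typescript
// Hypothetical item shape; the real items have different fields.
type Item = { key: string };

// In-memory stand-in for the Postgres table (an assumption, not the real DB).
const table = new Map<string, Item>();

// What should happen once per incoming item.
function processItem(item: Item): "created" | "exists" {
  // Step 1: query the DB with the item.
  if (table.has(item.key)) {
    return "exists";
  }
  // Step 2: no record found, so create one.
  table.set(item.key, item);
  return "created";
}

// N items in should give N results out, one per item.
function processAll(items: Item[]): Array<"created" | "exists"> {
  return items.map(processItem);
}
```

So with 10 items coming in, I would expect 10 lookups and up to 10 inserts.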

The output from my Function node shows 10 items, and that's OK.

But after the Postgres Execute Query node and the IF node, I get only one result.

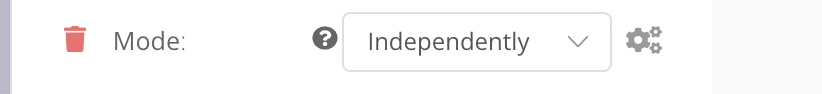

How do I properly iterate over every item, and not only the first one?