Background

My use case requires keeping the workflow “scaffolding” overhead low (i.e. the response time of an empty webhook trigger + response), while scaling to a high volume (potentially hundreds of simultaneous workflow executions).

Based on other forum posts (e.g. API Response Performance) and my own testing of bare-bones webhook workflows individually / in series, the n8n scaffolding appears sufficiently performant, with an average response time of roughly 20 ms to 50 ms.

My challenge is this: how can I maintain this response time while increasing the volume of simultaneous / parallel requests by leveraging n8n scaling functionality / queue mode (or any additional techniques)?

In my current testing / configuration, I am not able to achieve the desired performance, and in fact I notice some counterintuitive behavior. I see significant performance gains when scaling from [1 worker + 1 webhook processor] up to around [10 workers + 10 webhook processors]. But even in this configuration, I cannot get anywhere near the mean response time I see when testing individually / in series (I typically test with 100 simultaneous requests).

What is even more interesting is that as I scale beyond the ~10X range (I tested all the way up to [50 workers + 50 webhook processors]), performance flatlines or even worsens slightly, with mean response time starting to creep up.

This is counterintuitive to me, as I would expect that if I was testing 100 requests in parallel, as I scaled up towards a maximum of 100 workers / webhook processors, I should be seeing the same performance as in series.
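The expectation above corresponds to an idealized model in which the workers are the only bottleneck: N simultaneous requests are served in waves of W, and each wave takes one service time. A minimal sketch of that model follows; the function name and the 30 ms service time are illustrative assumptions, not measurements from my setup. It deliberately ignores any shared cost (queue, database, load balancer), so if real results plateau well above what it predicts, that would suggest something outside the workers is dominating.

```python
def ideal_mean_response_ms(n_requests: int, n_workers: int, service_ms: float) -> float:
    """Mean response time if requests are served in waves of `n_workers`.

    Assumes every request arrives at t=0, each worker handles one request
    at a time, and nothing else (queue, database, network) adds latency.
    """
    if n_requests <= 0 or n_workers <= 0:
        raise ValueError("n_requests and n_workers must be positive")
    total = 0.0
    for i in range(n_requests):
        wave = i // n_workers + 1      # 1-based wave this request lands in
        total += wave * service_ms     # it finishes at the end of its wave
    return total / n_requests


# Hypothetical 30 ms service time, 100 simultaneous requests:
for workers in (1, 10, 50, 100):
    print(workers, ideal_mean_response_ms(100, workers, 30.0))
# 1 -> 1515.0, 10 -> 165.0, 50 -> 45.0, 100 -> 30.0
```

Under this model, going from 10 to 50 workers should still cut the mean substantially, which is why the flatline I am seeing is surprising.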

I am using AWS ECS Fargate to launch services, setting the target number of tasks on each service to control the number of worker / webhook instances. (I have also confirmed in the logs that tasks are being properly distributed across the worker / webhook instances, so all of them are being used.)

Questions

- Are my requirements feasible for n8n to begin with?

- What potential bottlenecks could be limiting performance, and what could I do to remedy them?

- Any other ideas to meet my performance goals?

Please share your workflow

My testing workflow is simply two nodes: webhook trigger + respond to webhook.

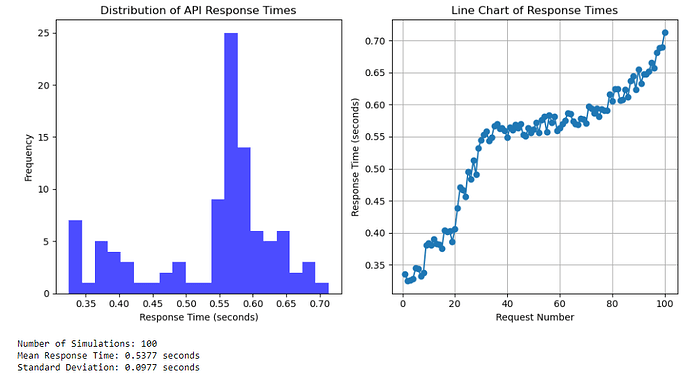
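For anyone who wants to reproduce the series vs. parallel comparison against a workflow like this, here is a minimal sketch using only the Python standard library. It is not necessarily the tool behind the results in this post; the webhook URL, the function names, and the choice of one client thread per request are all assumptions made for illustration. Client-side thread start-up adds some noise of its own, so treat the numbers as approximate.

```python
import math
import statistics
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Hypothetical URL: replace with the production URL of your own webhook node.
WEBHOOK_URL = "http://localhost:5678/webhook/test"


def timed_get(url: str) -> float:
    """Send one GET request and return the elapsed time in milliseconds."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=30) as resp:
        resp.read()
    return (time.perf_counter() - start) * 1000.0


def run_series(url: str, n: int = 100, fetch=timed_get) -> list:
    """Send n requests one after another."""
    return [fetch(url) for _ in range(n)]


def run_parallel(url: str, n: int = 100, fetch=timed_get) -> list:
    """Send n requests at (approximately) the same time, one thread each."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(fetch, [url] * n))


def summarize(latencies_ms: list) -> dict:
    """Mean, nearest-rank 95th percentile, and max of the latencies."""
    ordered = sorted(latencies_ms)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "mean": statistics.fmean(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }


# Example usage (needs a reachable webhook, so it is left commented out):
# print("series  ", summarize(run_series(WEBHOOK_URL)))
# print("parallel", summarize(run_parallel(WEBHOOK_URL)))
```

Reporting a percentile alongside the mean helps show whether a few slow requests are pulling the average up or whether every request is uniformly slower under load.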

And FYI, here are some results from my testing:

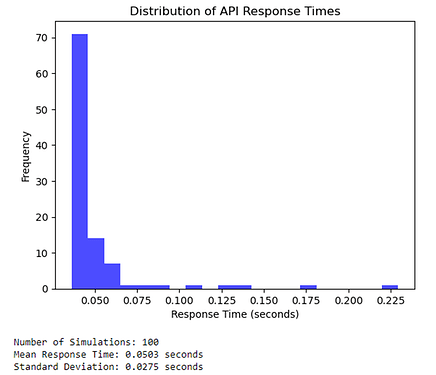

100 requests in series:

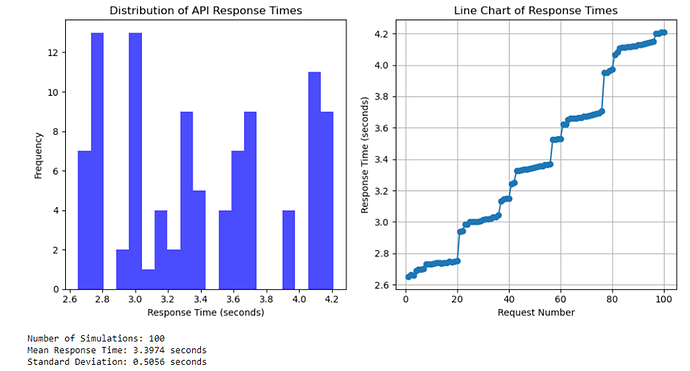

100 requests in parallel - 1 worker + 1 webhook processor:

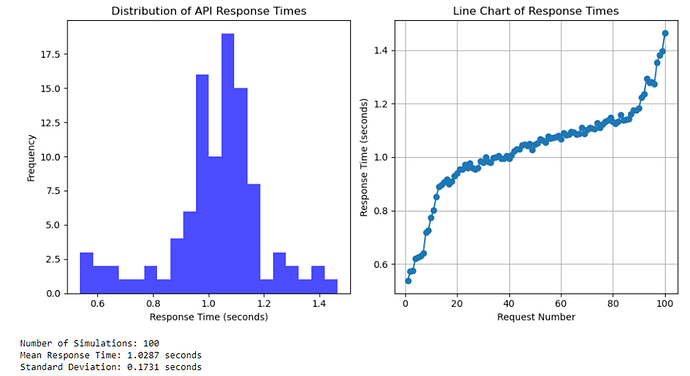

100 requests in parallel - 10 workers + 10 webhook processors:

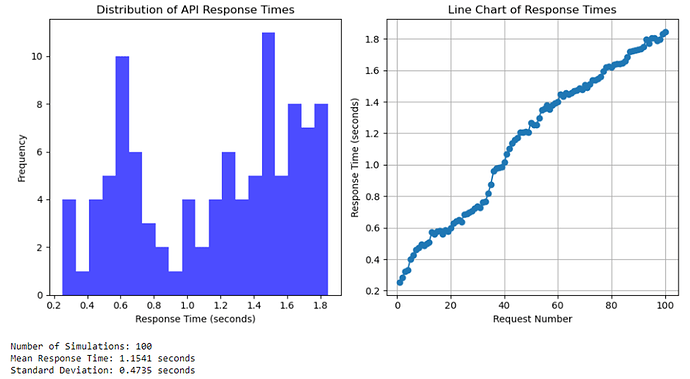

100 requests in parallel - 50 workers + 50 webhook processors:

Information on your n8n setup

- n8n version: 1.18.0

- Database (default: SQLite): Postgres

- n8n EXECUTIONS_PROCESS setting (default: own, main): queue

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker-image based self hosted setup using AWS ECS Fargate

- Operating system: Linux