The idea is:

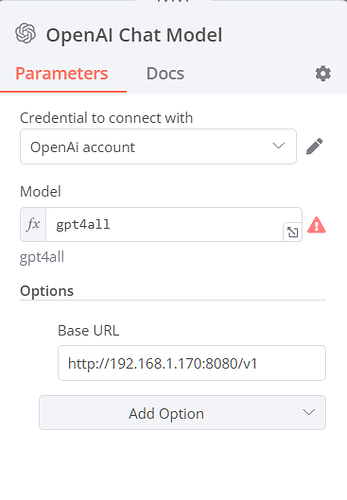

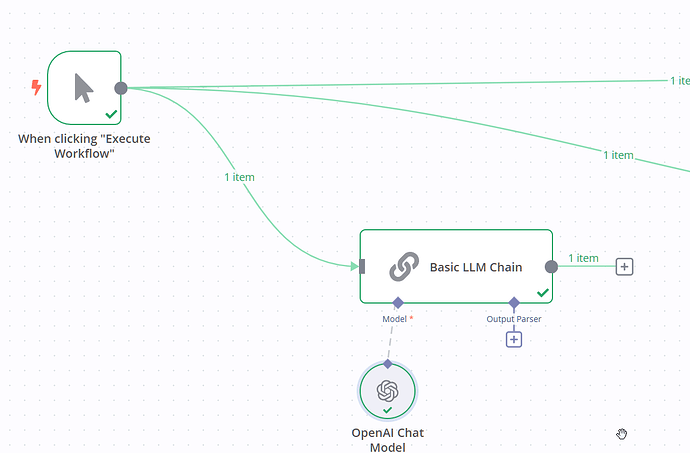

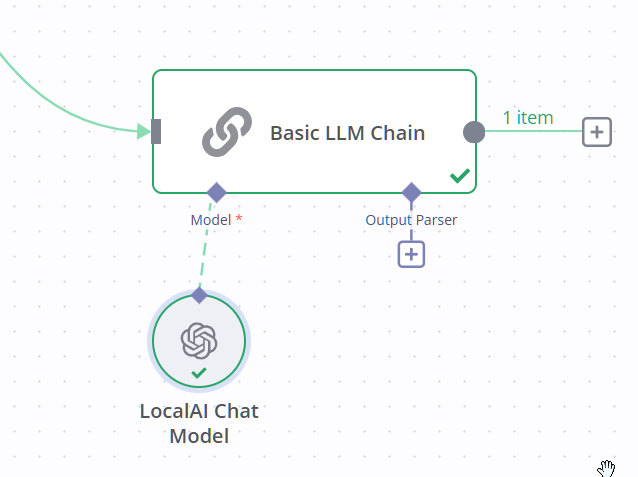

LocalAI is a project for running LLMs locally and exposing an API compatible with the OpenAI API. This could be implemented in one of two ways: add a new LocalAI node that is almost identical to the existing OpenAI ones, or add an “OpenAI endpoint” setting that defaults to the OpenAI URI and lets the user change it to another URI (such as a locally hosted LocalAI instance).
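To illustrate the second option, here is a rough Python sketch of a client whose endpoint defaults to OpenAI but can be overridden. It is not taken from any existing node: `build_chat_request`, `OPENAI_BASE_URL`, the model name, and the `http://localhost:8080/v1` address are all assumptions made for the example, so adjust them to your own LocalAI setup. The request is only built, not sent.

```python
import json
import urllib.request

# Default endpoint; a user could override it with a LocalAI URI.
OPENAI_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(prompt, model, api_key="", base_url=OPENAI_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request.

    Hypothetical helper for illustration: only base_url changes between
    OpenAI and an OpenAI-compatible server such as LocalAI.
    """
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: a local server may not need a key, so it is optional.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Same call, pointed at an assumed local LocalAI address and model name.
local_request = build_chat_request(
    "Hello", model="my-local-model", base_url="http://localhost:8080/v1"
)
print(local_request.full_url)
```

With this shape, the rest of the node logic would not need to know which backend it is talking to; passing the result to `urllib.request.urlopen` would send it.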

My use case:

I would like to run LLMs locally using LocalAI, which supports many LLM engine backends.

I think it would be beneficial to add this because:

It is an alternative to Ollama that supports a variety of other LLMs.

Any resources to support this?

https://localai.io/ has plenty of documentation.

Are you willing to work on this?

Sorry, I don’t have enough time.